Volume 26, Issue 4 (Winter 2026)

jrehab 2026, 26(4): 490-509


Taheri Afshar M, Eshaghzadeh M, Nadi A, Poursadeghian M, Rajabzadeh M. The Ethical Dilemmas of Artificial Intelligence In Nursing: A Systematic Review. jrehab 2026; 26 (4) :490-509

URL: http://rehabilitationj.uswr.ac.ir/article-1-3626-en.html


Mozhdeh Taheri Afshar1, Maliheh Eshaghzadeh2, Amirhossein Nadi3, Mohsen Poursadeghian *4, Mostafa Rajabzadeh5

1- Student Research Committee, Ramsar Fatemeh Zahra School of Nursing and Midwifery, Health Research Institute, Babol University of Medical Sciences, Babol, Iran.

2- Department of Nursing, School of Nursing and Midwifery, Torbat Heydariyeh University of Medical Sciences, Torbat Heydariyeh, Iran.

3- Department of Nursing, Student Research Committee, Torbat Heydariyeh University of Medical Sciences, Torbat Heydariyeh, Iran.

4- Social Determinants of Health Research Center, Ardabil University of Medical Sciences, Ardabil, Iran. Email: poursadeghiyan@gmail.com

5- Student Research Committee, School of Midwifery Nursing, Torbat-e Heydariyeh University of Medical Sciences, Torbat Heydariyeh, Iran.



Tabulation and Summarization of Information and Data

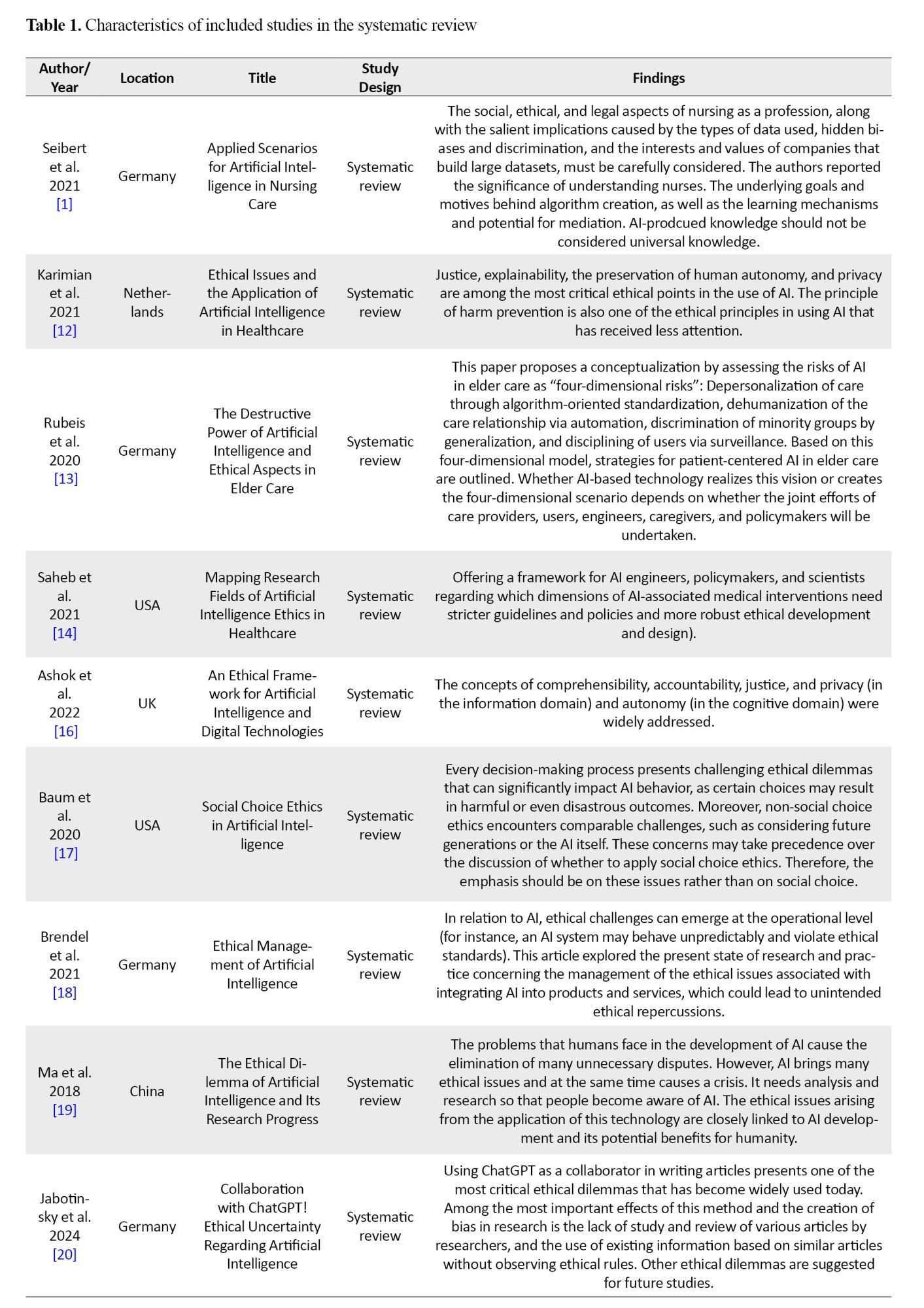

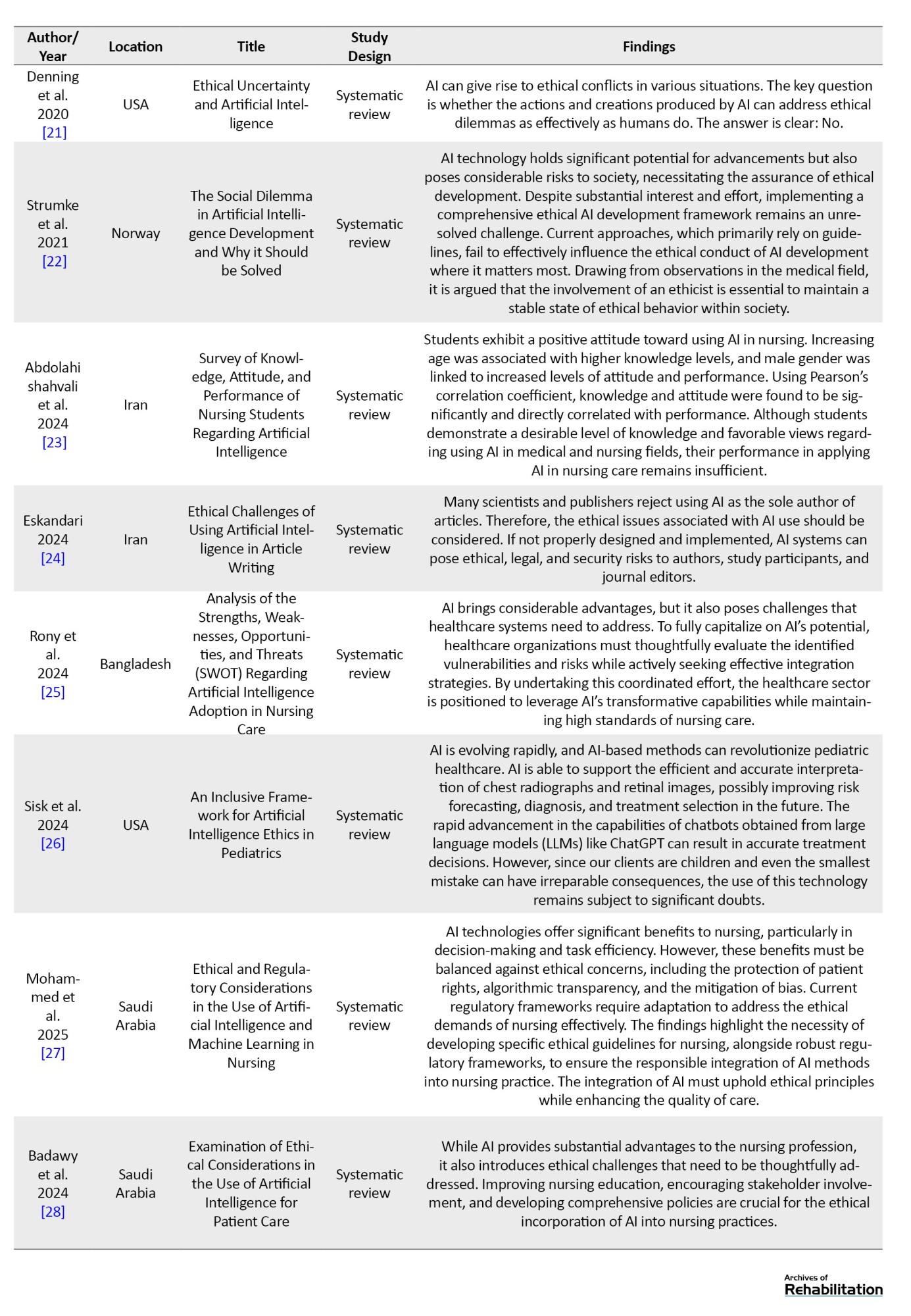

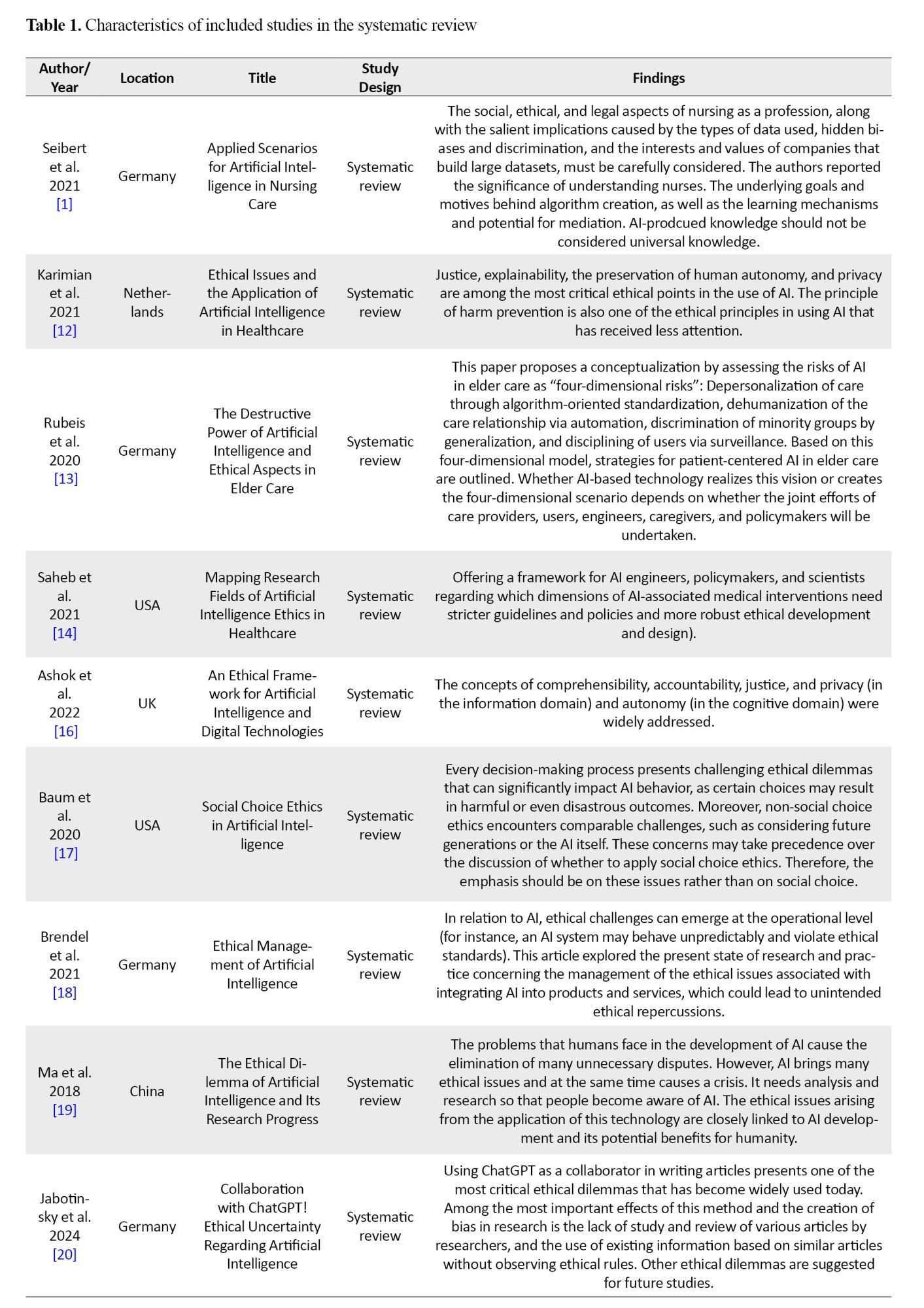

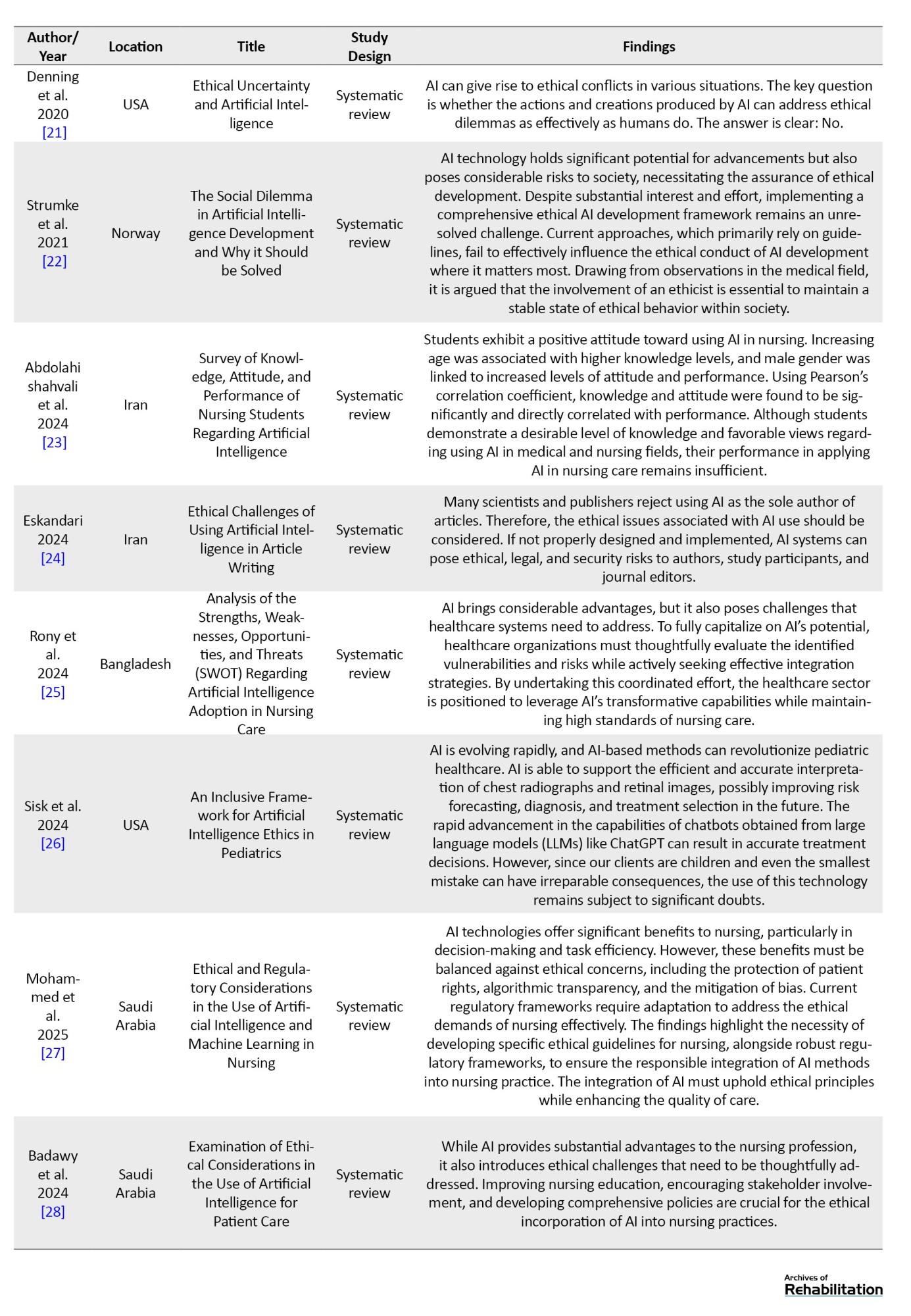

In this stage, after data extraction, all study data—including the first author’s name, location, title, year of publication, and findings—were summarized in Table 1.

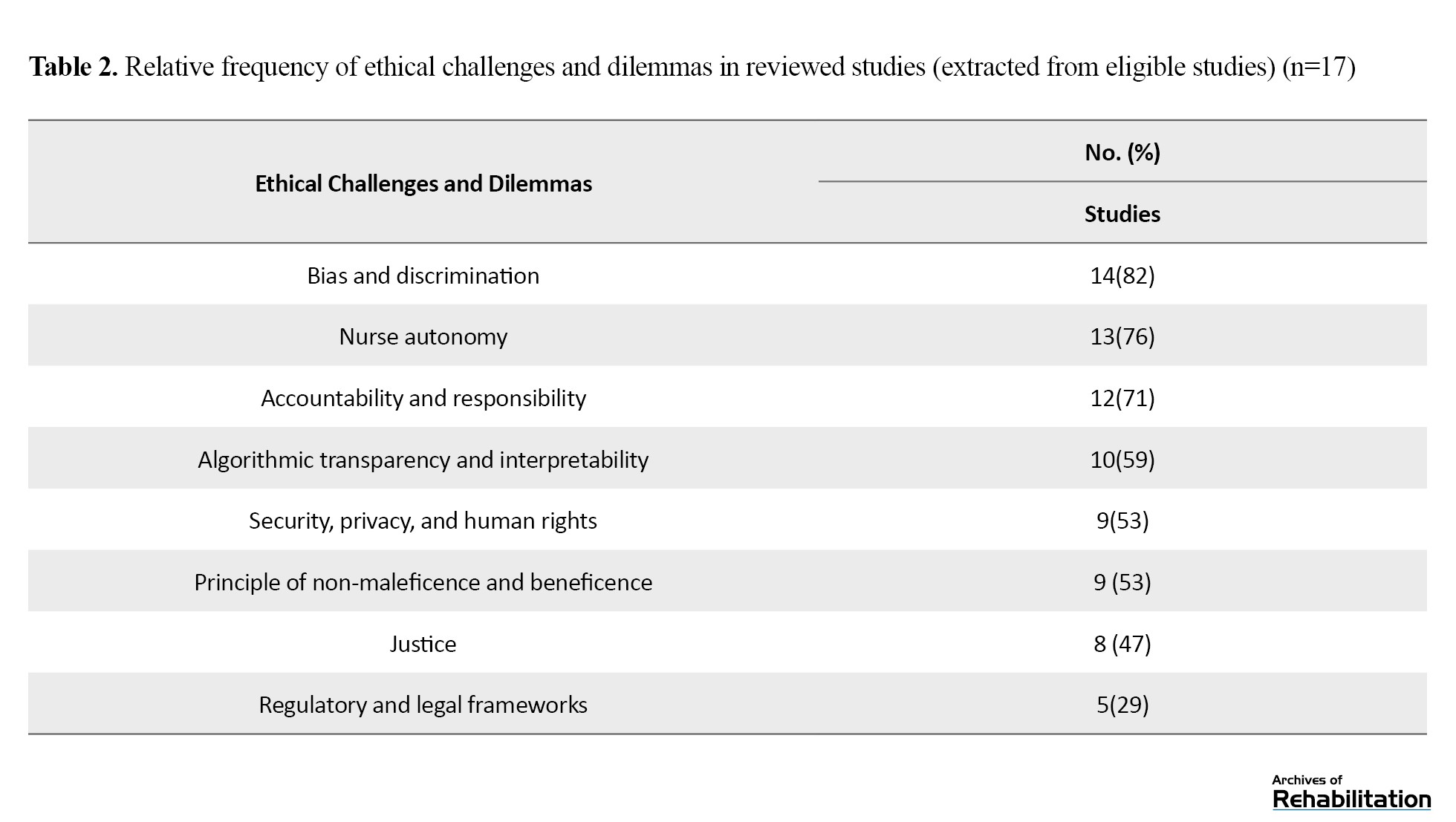

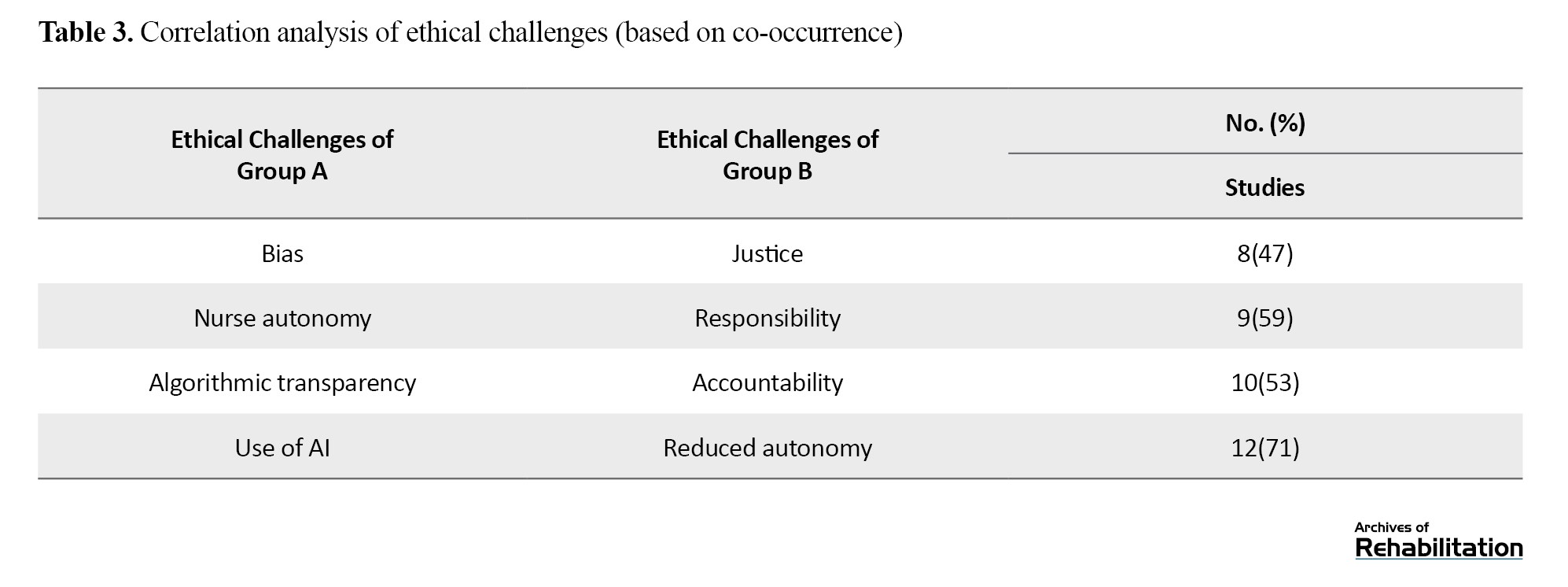

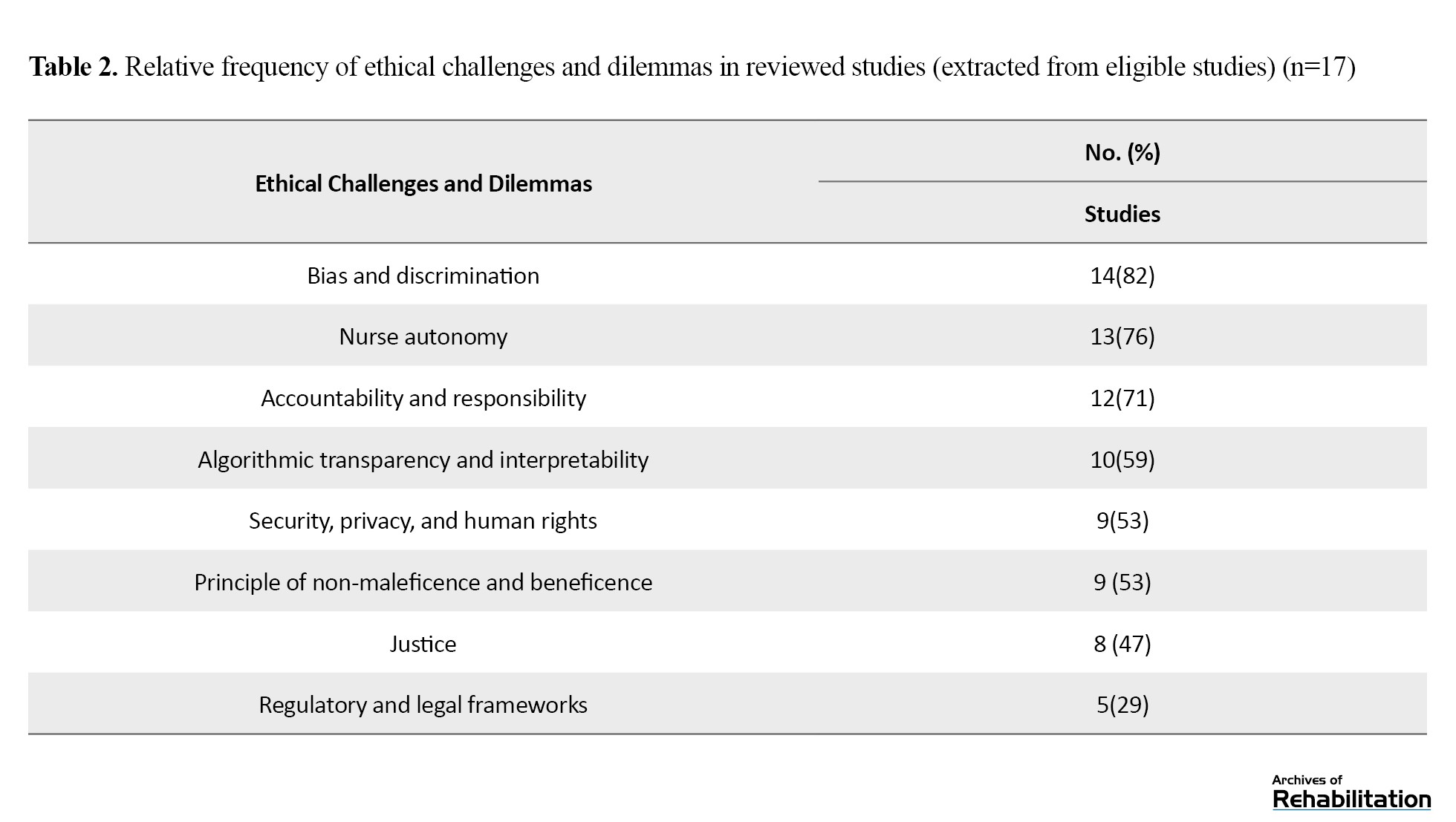

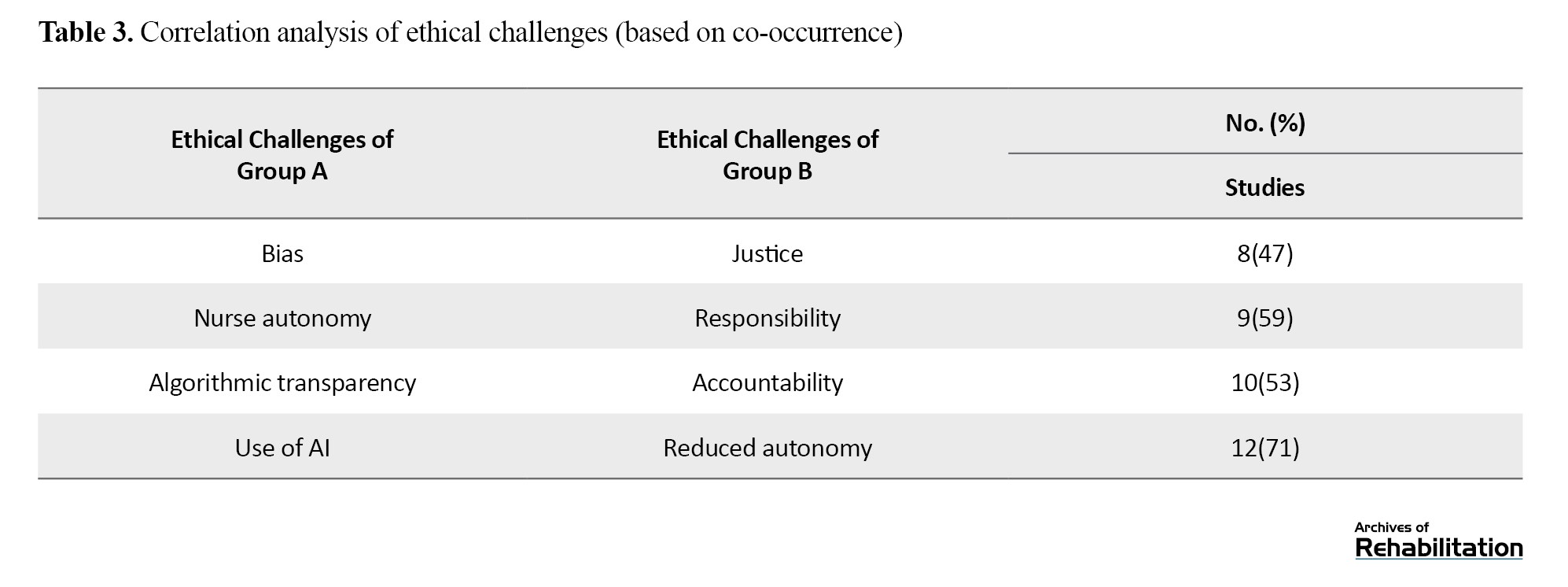

Furthermore, Table 2 presents the relative frequency of ethical challenges and dilemmas in the reviewed studies, and Table 3 provides a correlation analysis of ethical challenges (based on co-occurrence).
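The article does not state which statistic underlies the co-occurrence analysis in Table 3. As a minimal sketch of one simple basis for such an analysis, the snippet below counts how often two themes appear in the same study. The study labels and theme assignments are hypothetical toy values chosen for illustration, not data from the review.

```python
from itertools import combinations

# Hypothetical coding of which themes each study mentions.
# These are toy values for illustration, NOT data from the review.
toy_studies = {
    "study_A": {"bias", "autonomy", "accountability"},
    "study_B": {"bias", "transparency"},
    "study_C": {"autonomy", "accountability"},
    "study_D": {"bias", "autonomy"},
}


def cooccurrence_counts(studies):
    """Count, for every pair of themes, the studies that mention both."""
    counts = {}
    for themes in studies.values():
        # Sort so each pair has one canonical (alphabetical) key.
        for a, b in combinations(sorted(themes), 2):
            counts[(a, b)] = counts.get((a, b), 0) + 1
    return counts


pairs = cooccurrence_counts(toy_studies)
for (a, b), n in sorted(pairs.items()):
    print(f"{a} + {b}: {n} studies")
```

Raw pair counts like these could then be normalized (for example, by the number of studies) before being compared across themes.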

Result Reporting

After reviewing the abstracts and full texts of articles relevant to the research topic, the necessary information for writing the paper was extracted. For each study, the extracted information was the year of publication, research design, the author’s name, location, sampling method, data collection tools, and results. Finally, the information from the articles was organized and presented in the form of a comprehensive review article.

Results

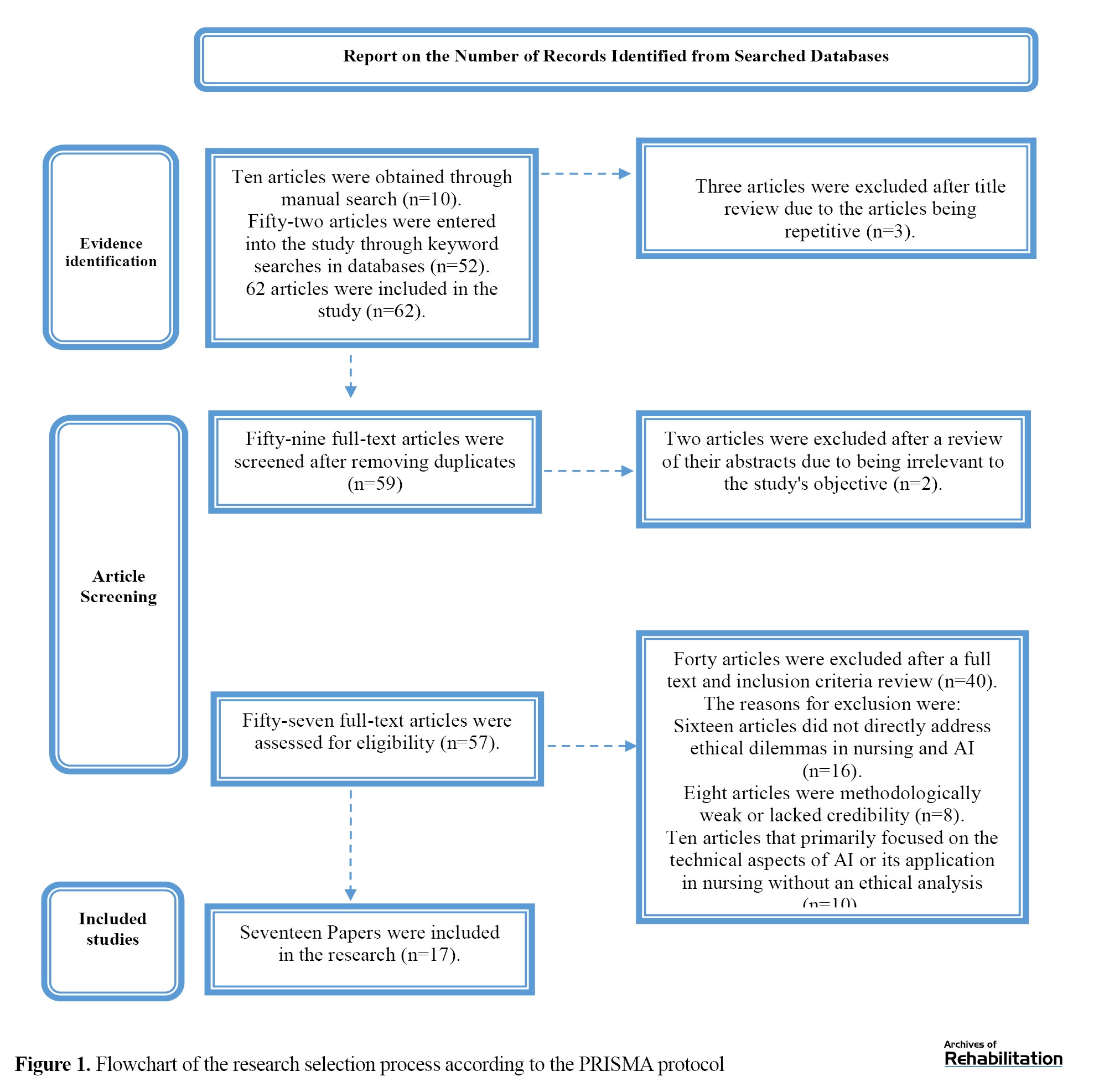

The database search returned 62 studies. After title and abstract screening, 10 irrelevant articles were excluded. A full-text review of the remaining 52 articles identified 17 studies that met the inclusion criteria (Figure 1). The selection process adhered to the PRISMA protocol, and the characteristics of the included papers are detailed in Table 1.

The included studies show that applying AI in healthcare raises several ethical challenges. AI can affect patient autonomy, which makes it necessary to ensure that patients participate in decisions about their care. Integrating AI into clinical practice also poses immediate challenges, including how to align its use with the process of obtaining informed consent. Using AI in healthcare requires safety, transparency, and clear guidelines and regulations. Because AI applications collect sensitive personal data, those data must be protected from cyberattacks, and patient privacy must be maintained. The development and application of AI also require timely accountability. In sum, despite its numerous benefits, AI in healthcare poses limitations and ethical challenges concerning patient data privacy, safety, clarity of guidelines, informed consent, accountability, and patient autonomy. Establishing clear guidelines and regulations is crucial to ensuring that AI is developed and implemented ethically and accountably. These challenges are detailed for each study in Table 1.
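The screening counts reported above can be checked with simple arithmetic. The sketch below uses only figures stated in the article (62 retrieved, of which 20 came from Persian and 42 from international databases; 10 excluded at title and abstract screening; 17 included). The number excluded at full-text review is derived here by subtraction and is not stated in the text; the class and field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Screening counts for a PRISMA-style flow (field names are illustrative)."""
    identified: int
    excluded_on_title_abstract: int
    included: int

    @property
    def full_text_assessed(self) -> int:
        return self.identified - self.excluded_on_title_abstract

    @property
    def excluded_on_full_text(self) -> int:
        # Derived by subtraction; the article does not report this figure.
        return self.full_text_assessed - self.included


# Counts by source, as reported in the Methods section.
sources = {"Persian databases": 20, "international databases": 42}

flow = PrismaFlow(
    identified=sum(sources.values()),
    excluded_on_title_abstract=10,
    included=17,
)

print("Identified:", flow.identified)
print("Assessed in full text:", flow.full_text_assessed)
print("Excluded at full text (derived):", flow.excluded_on_full_text)
print("Included:", flow.included)
```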

Discussion

This study, through a comprehensive review of 17 relevant articles, delves into the ethical issues arising from using AI in nursing. Our findings clearly demonstrate that integrating AI into nursing care generates a series of ethical dilemmas for nurses, which can impact their decision-making and performance in critical clinical situations. These ambiguities and challenges are not merely theoretical but are inherent to the very nature of AI technologies and their application in complex healthcare environments.

The use of AI can create numerous ethical challenges and confront nurses with dilemmas, causing hesitation and uncertainty in their choices and actions when delivering care. The issue is important enough that multiple studies have analyzed it. Recent advancements in AI within healthcare are accompanied by several ethical concerns. Challenges exist regarding bias, discrimination, lack of transparency, and logical or anomalous issues. A major challenge is the presence of bias in AI algorithms. These algorithms are trained on data that may carry historical, cultural, or social biases, and such biases can lead to discrimination in the delivery of care. For instance, AI recommendations may be less precise or even detrimental for certain patient groups, including ethnic minorities or individuals from lower socioeconomic backgrounds. Further questions concern whether AI should be allowed to interact or intervene, and whether these systems should serve as supportive tools or decision-making agents. Although AI is frequently utilized in nursing care, its application must adhere to ethical standards.

Ultimately, the study’s findings indicate a sharp increase in publications related to AI and its ethical challenges since 2018. This trend may be attributed to the increasing application of AI in healthcare, an area that has received limited attention regarding its ethical challenges [29]. Recent research provides evidence that AI assists care and treatment teams in diagnostic processes, improves collaboration among healthcare staff, helps patients manage their illnesses [12, 30], and is more cost-effective. However, the development of any supportive technology typically requires careful consideration of ethical principles. The three ethical principles most frequently discussed in studies to date are non-maleficence, beneficence, and justice. Non-maleficence, in the context of AI use, raises the question of how to manage situations where clients suffer even the slightest harm due to an incorrect AI diagnosis. Issues related to beneficence and justice are also subject to criticism [12].

Compared to other technologies, AI poses a greater threat to the autonomy of nurses. Currently, AI assists healthcare providers with specific tasks; for instance, these systems are now capable of gathering information more quickly, better interpreting complex interdependencies, and extracting assumptions without subjective bias. For example, due to workload and fatigue among nurses, AI often yields better performance results, which serves as a warning sign. Hence, as these systems continue to outperform humans, the autonomy of healthcare professionals will undoubtedly be limited [13]. Since we are moving from a supportive technology toward independent decision-making, research on autonomy is not yet widespread but is becoming a crucial topic. While AI processes information about patients to obtain results, these outcomes may be difficult for humans to interpret, leading to a diminished mastery and insight from the workforce and an increased reliance on a purely machine-based approach [14].

Moreover, AI algorithms trained on sample datasets containing specific patient characteristics are at high risk of producing inaccurate results if the data are incomplete or incorrect. Thus, these inaccuracies may even be undetectable because of the complexity of the underlying algorithms. Additionally, the information used to train an algorithm is currently classified by humans and may, to some extent, contain subjective bias [16]. Autonomy, intentionality, and accountability in available AI systems or agents, like robots providing healthcare, present ethical challenges similar to those faced by nurses. Intentionality refers to an AI system’s capacity to act in ways that can be either ethically harmful or beneficial, with actions that are purposeful and calculated [17]. AI systems follow certain social norms and assume specific responsibilities. Nevertheless, issues surrounding the autonomy, intentionality, and accountability of these systems remain complex. When an AI system or agent fails to perform a task correctly, resulting in negative outcomes, it raises the question of who should be held responsible [18]. The question is particularly important when adverse consequences stem from programming errors, inaccurate input data, inadequate performance, or other factors. Consequently, accountability emerges as an ethical concern closely tied to the human elements involved in the design, implementation, deployment, and utilization of AI [19].

The primary objective of adhering to ethical principles in AI is to ensure that actions align with moral standards, which is quite challenging. One study indicates that designers, software engineers, and others involved in creating and implementing AI systems must adhere to human rights laws. Even a minor mistake in the application can result in a breach of human rights, presenting another ethical concern [20]. The social implications of ethical issues in AI are significant, as the replacement of healthcare personnel, particularly nurses, with automated AI systems and robots may disrupt employment in the field. This prospect has alarmed many healthcare policymakers. The principle of justice relates to non-discrimination and impartiality [21]. Racism and gender bias are common in many incidents, with most occurring in language or computer vision models, which should be a priority area for AI professionals during design and deployment. The principle of non-maleficence entails a commitment not to inflict harm on others. This principle, which relates to safety, harm, security, and protection concerns, is the third most common topic in ethical guidelines [22]. AI technologies offer significant benefits for nursing, particularly in decision-making and task efficiency. However, these benefits must be balanced against ethical concerns, including protecting patient rights, algorithmic transparency, and mitigating bias. Current regulatory frameworks need to be adapted to meet the ethical needs of nursing [27].

Based on a review of the studies in Table 2, bias and discrimination received the most attention, being reported in 14 studies (82%). Following this, nurse autonomy was the second most frequently addressed topic, covered in 13 studies (76%), and the accountability and responsibility principle was also a focus in 12 studies (71%).

The algorithmic transparency and interpretability topic was addressed in 10 studies (59%). Security, privacy, and human rights, as well as non-maleficence and beneficence, were each reported in 9 studies (53%). Justice was identified in 8 studies (47%), and finally, regulatory and legal frameworks were the least frequent ethical challenge or dilemma, appearing in 5 studies (29%).
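The percentages above follow from dividing each count by the 17 included studies. A minimal sketch reproduces them; the counts are those reported in the text, while the function and variable names are illustrative.

```python
# Number of included studies, and the count of studies addressing each
# ethical challenge, as reported in the text.
TOTAL_STUDIES = 17

counts = {
    "Bias and discrimination": 14,
    "Nurse autonomy": 13,
    "Accountability and responsibility": 12,
    "Algorithmic transparency and interpretability": 10,
    "Security, privacy, and human rights": 9,
    "Non-maleficence and beneficence": 9,
    "Justice": 8,
    "Regulatory and legal frameworks": 5,
}


def relative_frequency(count, total=TOTAL_STUDIES):
    """Return the share of studies as a whole-number percentage."""
    return round(100 * count / total)


percentages = {theme: relative_frequency(n) for theme, n in counts.items()}

# List themes from most to least frequently addressed.
for theme, pct in sorted(percentages.items(), key=lambda item: -item[1]):
    print(f"{theme}: {counts[theme]}/{TOTAL_STUDIES} ({pct}%)")
```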

Conclusion

Our study’s findings demonstrate a substantial and growing number of ethical challenges and dilemmas related to using AI in nursing. While integrating AI into the nursing field holds promise for improving care delivery, it also raises serious ethical questions and uncertainties for nurses and the healthcare system that must not be overlooked. Our results show that this technology is not merely an auxiliary tool but a factor that can fundamentally influence the way nurses make decisions, interact with patients, and ultimately provide high-quality care. Despite offering benefits such as assistance with diagnosis, improved collaboration, and cost-effectiveness, AI presents nurses with numerous ethical challenges and dilemmas. These involve bias and discrimination in algorithms, a lack of transparency in their performance, threats to nurses’ autonomy, ambiguities concerning accountability in the event of errors, and concerns related to traditional ethical principles such as beneficence, non-maleficence, and justice. Numerous studies highlight that the deployment of AI necessitates addressing complex ethical dilemmas. Future research should concentrate on thorough investigations and the creation of effective strategies to guarantee the ethical and responsible use of AI in healthcare.

Ethical Considerations

Compliance with ethical guidelines

All research ethics principles were observed in this study. Ethical approval was obtained from Ardabil University of Medical Sciences (Code: IR.ARUMS.REC.1404.114).

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Authors' contributions

Conceptualization: Mozhdeh Taheri Afshar; Methodology: Mozhdeh Taheri Afshar and Mostafa Rajabzadeh; validation and supervision: Mohsen Poursadeghiyan; analysis and resources: Amir Hossein Nadi; investigation: Malihe Eshaqzadeh; initial draft preparation and visualization: Mostafa Rajabzadeh; editing & review: Mostafa Rajabzadeh and Mohsen Poursadeghiyan; Project management: Mohsen Poursadeghiyan and Malihe Eshaqzadeh.

Conflict of interest

The authors declared no conflict of interest.


Introduction

With the advancement of artificial intelligence (AI) technology, AI can be employed in midwifery and nursing to improve decision-making, enhance patient care, and optimize healthcare system performance [1]. Consequently, using AI in these fields has been regarded as an effective and innovative approach to providing care services [2]. Furthermore, AI can assist nurses in making clinical decisions during intricate care scenarios or carry out tasks like documentation [3]. Digitalization and technological development have enabled AI to facilitate better healthcare and nursing care provision. AI technologies are systems that exhibit intelligent behavior and can perform various tasks autonomously to achieve specific goals by analyzing their environment [4].

Although AI technologies have been utilized in nursing for a long time, they have not always been explicitly recognized as such. Nevertheless, recent research and discussions have increased attention on using AI in healthcare [5]. Despite growing research on digital technologies to improve nursing care quality, AI remains underutilized in this field. Recently, there has been an increase in research highlighting the potential for AI advancements in nursing care [6]. AI can enhance automated scheduling and planning, as well as streamline human processes, such as developing nursing staff schedules, making decisions related to care scheduling, and aiding nurses in executing tasks remotely without direct patient contact [7].

Moreover, AI has been introduced as a tool that can transform healthcare delivery and promote patient well-being. Consequently, as AI grows, this tool enters the healthcare system, and nurses must also keep pace with this development [8]. To date, few studies have delved into the practical relationship and application of AI systems tailored to certain nursing care requirements. AI can help provide high-quality, more efficient, and more equitable care, while also supporting less experienced professionals, for example, by helping to identify signs of rare diseases through extensive database searches. Another application of AI in nursing is nurse speech recognition technology, in which patient-related documents are quickly made available to the nurse with the help of terms and keywords provided to the AI [9, 10].

One application of AI in nursing involves analyzing vast amounts of nursing notes to identify patients with a history of falls or substance and alcohol use disorders, assist in care planning, and assess patient risk. Nurses need to ensure that the integration of AI into healthcare systems addresses ethical concerns and aligns with fundamental nursing values, including compassionate and holistic care [11]. A major ethical challenge associated with AI usage is the emergence of ethical dilemmas. An ethical dilemma is a type of ethical conflict, defined as any incompatibility between an individual’s motivations and desires, educational methods, values, and the fulfillment of moral duties and responsibilities [1]. Ethical conflict refers to the interference of moral values in situations requiring ethical action and a conflict between an individual’s internal and moral motivations [12].

The ethical challenges related to using AI in nursing care include a lack of human contact and interaction; limitations in interpreting patient needs; AI’s limited ability to provide physical care; limited capacity to handle complex situations that call for critical thinking and decision-making; dependence on data quality; patient privacy and data security; the impact on patient autonomy; safety and transparency in instructions; informed consent; and accountability [13, 14]. Additionally, AI can have unintended consequences in healthcare provision. Although AI has the potential to enhance nursing practice, the ethical responsibilities of nurses in its application will become increasingly important. To identify and navigate ethical dilemmas, nurses require a precise understanding of these ethical challenges so that they can manage the difficult situations that arise. However, while we will explore four key ethical concepts in nursing (accountability, advocacy, collaboration, and care), these do not represent the entire list of relevant ethical concepts in nursing [15]. In fact, by upholding core nursing ethical values, such as meticulous nursing care devoid of moral ambiguity, this technology can be used appropriately. Accordingly, this study examined the ethical dilemmas of AI in nursing [16].

Materials and Methods

This study is a systematic review. The steps undertaken are as follows:

Search method

Two researchers independently searched for and extracted relevant studies based on the research objectives and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist. The search covered articles published from January 2018 to April 2025 across several databases, including Medline, PubMed, Association for Computing Machinery (ACM) Digital Library, Scopus, and Web of Science, as well as the Scientific Information Database (SID) and Magiran search engines. The search employed a Medical Subject Headings (MeSH) strategy and the keywords “ethics,” “artificial intelligence,” and “nursing,” in both Persian and English, combined with Boolean operators. To increase the validity of the study’s findings and minimize data entry bias, the article search and evaluation process was conducted meticulously. After obtaining full-text access, the two authors independently assessed the articles selected for final evaluation against the inclusion and exclusion criteria. Articles meeting the inclusion criteria were analyzed. This process was supervised by a third party.
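The article names the keywords and mentions Boolean operators but does not give the exact search strings. The sketch below shows one plausible way to assemble such a query, assuming the three concepts are joined with AND and any synonyms within a concept are joined with OR. The `build_query` helper and the synonym "machine learning" are hypothetical illustrations, not part of the reported strategy.

```python
def build_query(concepts):
    """Join synonyms with OR inside each concept, then join concepts with AND.

    `concepts` is a list of lists; each inner list holds the terms for one concept.
    """
    groups = []
    for synonyms in concepts:
        quoted = [f'"{term}"' for term in synonyms]
        group = " OR ".join(quoted)
        # Parenthesize only when a concept has more than one term.
        groups.append(f"({group})" if len(quoted) > 1 else group)
    return " AND ".join(groups)


# The three keywords reported in the article, one term per concept.
query = build_query([["ethics"], ["artificial intelligence"], ["nursing"]])
print(query)

# Hypothetical expansion with a synonym (an assumption, not from the article).
expanded = build_query(
    [["ethics"], ["artificial intelligence", "machine learning"], ["nursing"]]
)
print(expanded)
```

In practice each database has its own field tags and MeSH syntax, so a string built this way would still need adapting per database.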

Exclusion and Inclusion Criteria

Inclusion criteria: Research studies, systematic reviews, analytical articles, and case reports that focused on AI technologies and their application in nursing (particularly concerning ethical dilemmas) and provided relevant empirical data and evidence; articles published in either Persian or English; articles with full text available; and articles published from 2018 to 2025, to ensure inclusion of the most recent data and findings.

Exclusion criteria: Articles that did not directly address ethical dilemmas in nursing and AI; those that were methodologically weak, lacked credibility, or provided unreliable results; articles focusing primarily on the technical aspects of AI or its application in nursing without ethical analysis; and articles written in languages other than Persian or English.

Article Quality Evaluation

Initially, the credibility of the articles was assessed using the PRISMA checklist. Two researchers performed the quality evaluation independently. In case of disagreement, a third observer reviewed the articles, and that observer’s opinion was taken as the final decision. To collect data from the final selected articles, a data extraction and classification form was used, which included the author’s name, title, country, year, and results. The data were then condensed, organized, compared, and summarized. In this study, the results of the articles were interpreted, and, whenever possible, the original sources cited by the authors were consulted.

Article Extraction Based on Inclusion Criteria

Using the keywords mentioned above, 62 papers were retrieved at the end of the search: 20 articles from Persian databases and 42 from international databases. These studies were then screened based on the inclusion criteria. The selection process began by compiling a list of abstracts and titles of all articles found in the databases. After a careful review of the titles and abstracts, many studies were excluded because of irrelevance to the study’s objective. If a decision could not be made based on reading the abstract and title, the full text of the article was reviewed. Subsequently, relevant articles were independently selected for inclusion in the research process, and finally, 17 articles were included in the study (Figure 1).


Tabulation and Summarization of Information and Data

In this stage, after data extraction, all study data—including the first author’s name, location, title, year of publication, and findings—were summarized in Table 1.

Furthermore, Table 2 presents the relative frequency of ethical challenges and dilemmas in the reviewed studies, and Table 3 provides a correlation analysis of ethical challenges (based on co-occurrence).

Result Reporting

After reviewing the abstracts and full texts of articles relevant to the research topic, the necessary information for writing the paper was extracted. For each study, the extracted information was the year of publication, research design, the author’s name, location, sampling method, data collection tools, and results. Finally, the information from the articles was organized and presented in the form of a comprehensive review article.

Results

Sixty-two studies were obtained from the databases. Following a review of the titles and abstracts, 10 articles deemed irrelevant were excluded. A full-text review of the remaining 52 articles identified 17 studies that met the inclusion criteria (Figure 1). The selection process adhered to the PRISMA protocol, and the characteristics of the included papers are detailed in Table 1. The application of AI in healthcare presents several ethical challenges that need to be addressed. It can affect patient autonomy, highlighting the need to ensure patient participation in care-related decision-making. In practice, AI faces immediate challenges in its integration into clinical practice, including how to align its use with the process of obtaining informed consent. Using AI in healthcare requires safety, transparency, and clear guidelines and regulations. It also involves collecting sensitive personal data, which must be protected from cyberattacks so that patient privacy is maintained. The development and application of AI in healthcare further require timely accountability and transparency. Despite its numerous benefits, AI in healthcare has limitations and poses ethical challenges, including patient data privacy, safety, clarity of guidelines, informed consent, accountability, and the impact on patient autonomy. Establishing clear guidelines and regulations is crucial to ensuring that AI is developed and implemented in an ethical and accountable manner. These issues are examined in detail in Table 1.
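The screening counts reported in the Methods and at the start of the Results can be tallied as a quick consistency check. The sketch below is only illustrative: the function and key names are hypothetical, and the number of full-text exclusions is derived here by subtraction rather than reported in the text.

```python
# Screening tally using the counts stated in the text.
# Function and key names are illustrative, not part of the original study.

def screening_flow():
    retrieved_by_source = {"persian": 20, "international": 42}
    retrieved = sum(retrieved_by_source.values())

    excluded_title_abstract = 10          # reported in the Results
    full_text_reviewed = retrieved - excluded_title_abstract
    included = 17                         # reported in the Results

    # Derived value (assumption: every remaining exclusion happened at full-text review).
    excluded_full_text = full_text_reviewed - included

    return {
        "retrieved": retrieved,
        "excluded_title_abstract": excluded_title_abstract,
        "full_text_reviewed": full_text_reviewed,
        "excluded_full_text": excluded_full_text,
        "included": included,
    }


flow = screening_flow()
for stage, count in flow.items():
    print(f"{stage}: {count}")
```

The totals agree with the figures given in the text: 62 records retrieved, 52 reviewed in full, and 17 included.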

Discussion

This study, through a comprehensive review of 17 relevant articles, delves into the ethical issues arising from using AI in nursing. Our findings clearly demonstrate that integrating AI into nursing care generates a series of ethical dilemmas for nurses, which can impact their decision-making and performance in critical clinical situations. These ambiguities and challenges are not merely theoretical but are inherent to the very nature of AI technologies and their application in complex healthcare environments.

The use of AI can create numerous ethical challenges and present nurses with a dilemma, creating hesitation and uncertainty in their choices and actions regarding ethical issues in care delivery. This issue is so crucial that multiple studies have been conducted in this area, analyzing these ethical issues. Recent advancements in AI within healthcare are accompanied by several ethical concerns. Challenges exist regarding bias, discrimination, lack of transparency, and logical or anomalous issues. A major challenge is the presence of bias in AI algorithms. These algorithms are trained on data that may carry historical, cultural, or social biases. Such biases can lead to discrimination in the delivery of care. For instance, AI recommendations may be less precise or even detrimental for certain patient groups, including ethnic minorities or individuals from lower socioeconomic backgrounds. Furthermore, various questions have arisen that attempt to address issues such as whether AI should be allowed to interact or intervene, and whether these systems should serve as supportive tools or decision-making agents. Although AI is frequently utilized in nursing care, its application must adhere to ethical standards.

Ultimately, the study’s findings indicate a sharp increase in publications related to AI and its ethical challenges since 2018. This trend may be attributed to the increasing application of AI in healthcare, an area that has received limited attention regarding its ethical challenges [29]. Recent research provides evidence that AI assists care and treatment teams in diagnostic processes, improves collaboration among healthcare staff, helps patients manage their illnesses [12, 30], and is more cost-effective. However, the development of any supportive technology typically requires careful consideration of ethical principles. The three ethical principles most frequently discussed in studies to date are non-maleficence, beneficence, and justice. Non-maleficence, in the context of AI use, raises the question of how to manage situations where clients suffer even the slightest harm due to an incorrect AI diagnosis. Issues related to beneficence and justice are also subject to criticism [12].

Compared to other technologies, AI poses a greater threat to the autonomy of nurses. Currently, AI assists healthcare providers with specific tasks; for instance, these systems are now capable of gathering information more quickly, better interpreting complex interdependencies, and extracting assumptions without subjective bias. For example, due to workload and fatigue among nurses, AI often yields better performance results, which serves as a warning sign. Hence, as these systems continue to outperform humans, the autonomy of healthcare professionals will undoubtedly be limited [13]. Since we are moving from a supportive technology toward independent decision-making, research on autonomy is not yet widespread but is becoming a crucial topic. While AI processes information about patients to obtain results, these outcomes may be difficult for humans to interpret, leading to a diminished mastery and insight from the workforce and an increased reliance on a purely machine-based approach [14].

Moreover, AI algorithms trained on sample datasets containing specific patient characteristics are at high risk of producing inaccurate results if the data are incomplete or incorrect. Such inaccuracies may even go undetected because of the complexity of the underlying algorithms. Additionally, the information used to train an algorithm is currently classified by humans and may, to some extent, contain subjective bias [16]. Autonomy, intentionality, and accountability in available AI systems or agents, such as robots providing healthcare, present ethical challenges similar to those faced by nurses. Intentionality refers to an AI system’s capacity to act in ways that can be either ethically harmful or beneficial, with actions that are purposeful and calculated [17]. AI systems follow certain social norms and assume specific responsibilities. Nevertheless, issues surrounding the autonomy, intentionality, and accountability of these systems remain complex. When an AI system or agent fails to perform a task correctly, resulting in negative outcomes, it raises the question of who should be held responsible [18]. The question is particularly important when adverse consequences stem from programming errors, inaccurate input data, inadequate performance, or other factors. Consequently, accountability emerges as an ethical concern closely tied to the human elements involved in the design, implementation, deployment, and utilization of AI [19].

The primary objective of adhering to ethical principles in AI is to ensure that actions align with moral standards, a goal that is difficult to achieve in practice. One study indicates that designers, software engineers, and others involved in creating and implementing AI systems must adhere to human rights laws. Even a minor mistake in the application can result in a breach of human rights, presenting another ethical concern [20]. The social implications of ethical issues in AI are significant, as the replacement of healthcare personnel, particularly nurses, with automated AI systems and robots may disrupt employment in the field. This prospect has alarmed many healthcare policymakers. The principle of justice relates to non-discrimination and impartiality [21]. Racial and gender bias appear in many reported incidents, most of them involving language or computer vision models, which should therefore be a priority for AI professionals during design and deployment. The principle of non-maleficence entails a commitment not to inflict harm on others. This principle, which relates to safety, harm, security, and protection concerns, is the third most common topic in ethical guidelines [22]. AI technologies offer significant benefits for nursing, particularly in decision-making and task efficiency. However, these benefits must be balanced against ethical concerns, including protecting patient rights, algorithmic transparency, and mitigating bias. Current regulatory frameworks need to be adapted to meet the ethical needs of nursing [27].

Based on a review of the studies in Table 2, bias and discrimination received the most attention, being reported in 14 studies (82%). Nurse autonomy was the second most frequently addressed topic, covered in 13 studies (76%), and accountability and responsibility ranked third, appearing in 12 studies (71%).

The algorithmic transparency and interpretability topic was addressed in 10 studies (59%). Security, privacy, and human rights, as well as non-maleficence and beneficence, were each reported in 9 studies (53%). Justice was identified in 8 studies (47%), and finally, regulatory and legal frameworks were the least frequent ethical challenge or dilemma, appearing in 5 studies (29%).
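The relative frequencies above follow directly from dividing each count by the 17 included studies. A minimal sketch of that arithmetic is shown below; the theme labels paraphrase the text, and the variable and function names are illustrative assumptions rather than part of the original analysis.

```python
# Relative frequency of each ethical theme among the 17 included studies.
# Counts are taken from the text; names are illustrative only.

TOTAL_STUDIES = 17

theme_counts = {
    "Bias and discrimination": 14,
    "Nurse autonomy": 13,
    "Accountability and responsibility": 12,
    "Algorithmic transparency and interpretability": 10,
    "Security, privacy, and human rights": 9,
    "Non-maleficence and beneficence": 9,
    "Justice": 8,
    "Regulatory and legal frameworks": 5,
}


def relative_frequency(count, total=TOTAL_STUDIES):
    """Return the share of studies as a whole-number percentage."""
    return round(100 * count / total)


relative_frequencies = {
    theme: relative_frequency(count) for theme, count in theme_counts.items()
}

for theme, pct in relative_frequencies.items():
    print(f"{theme}: {theme_counts[theme]}/{TOTAL_STUDIES} ({pct}%)")
```

Rounding to the nearest whole percent reproduces the values reported in the text (82%, 76%, 71%, 59%, 53%, 53%, 47%, and 29%).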

Conclusion

Our study’s findings demonstrate a substantial and growing number of ethical challenges and dilemmas related to using AI in nursing. While integrating AI into the nursing field holds promise for improving care delivery, it also raises serious ethical questions and uncertainties for nurses and the healthcare system that must not be overlooked. Our results clearly reveal that this technology is not merely an auxiliary tool but a factor that can fundamentally influence the way nurses make decisions, interact with patients, and ultimately provide high-quality care. Despite offering benefits such as assistance with diagnosis, improved collaboration, and cost-effectiveness, using AI in nursing presents numerous ethical challenges and dilemmas for nurses. These challenges involve issues such as bias and discrimination in algorithms, a lack of transparency in their performance, threats to nurses’ autonomy, ambiguities concerning accountability in the event of errors, and concerns related to traditional ethical principles such as beneficence, non-maleficence, and justice. Ethical challenges in the use of AI in nursing care are widespread, particularly concerning justice, autonomy, non-maleficence, beneficence, and accountability. Numerous studies highlight that the deployment of AI necessitates addressing complex ethical dilemmas. Future research should concentrate on thorough investigations and the creation of effective strategies to guarantee the ethical and responsible use of AI in healthcare.

Ethical Considerations

Compliance with ethical guidelines

All research ethics principles were observed in this study. Ethical approval was obtained from Ardabil University of Medical Sciences (Code: IR.ARUMS.REC.1404.114).

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Authors' contributions

Conceptualization: Mozhdeh Taheri Afshar; Methodology: Mozhdeh Taheri Afshar and Mostafa Rajabzadeh; Validation and supervision: Mohsen Poursadeghiyan; Analysis and resources: Amir Hossein Nadi; Investigation: Malihe Eshaqzadeh; Initial draft preparation and visualization: Mostafa Rajabzadeh; Editing and review: Mostafa Rajabzadeh and Mohsen Poursadeghiyan; Project management: Mohsen Poursadeghiyan and Malihe Eshaqzadeh.

Conflict of interest

The authors declared no conflict of interest.

References

- Seibert K, Domhoff D, Bruch D, Schulte-Althoff M, Fürstenau D, Biessmann F, et al. Application scenarios for artificial intelligence in nursing care: Rapid review. Journal of Medical Internet Research. 2021; 23(11):e26522. [DOI:10.2196/26522] [PMID]

- Esmali R, Akhlaghi Pirposhteh E, Askari A, Poursadeghiyan M. An overview of the applications and conditions for using artificial intelligence and digitalization in occupational health and safety in the workplace. Journal of Health and Safety at Work. 2025; 15(3):724-46. [Link]

- Ronquillo CE, Peltonen LM, Pruinelli L, Chu CH, Bakken S, Beduschi A, et al. Artificial intelligence in nursing: Priorities and opportunities from an international invitational think-tank of the Nursing and artificial intelligence leadership collaborative. Journal of Advanced Nursing. 2021; 77(9):3707-17. [DOI:10.1111/jan.14855] [PMID]

- De Gagne JC. The state of artificial intelligence in nursing education: past, present, and future directions. International Journal of Environmental Research and Public Health. 2023; 20(6):4884. [DOI:10.3390/ijerph20064884] [PMID]

- Ross A, Freeman R, McGrow K, Kagan O. Implications of artificial intelligence for nurse managers. Nursing Management. 2024; 55(7):14-23. [DOI:10.1097/nmg.0000000000000143] [PMID]

- Buchanan C, Howitt ML, Wilson R, Booth RG, Risling T, Bamford M. Predicted influences of artificial intelligence on nursing education: Scoping review. JMIR Nursing. 2021; 4(1):e23933. [DOI:10.2196/23933] [PMID]

- McGreevey JD 3rd, Hanson CW 3rd, Koppel R. Clinical, legal, and ethical aspects of artificial intelligence-assisted conversational agents in health care. JAMA. 2020; 324(6):552-3. [DOI:10.1001/jama.2020.2724] [PMID]

- Naik N, Hameed BMZ, Shetty DK, Swain D, Shah M, Paul R, et al. Legal and ethical consideration in artificial intelligence in healthcare: Who takes responsibility? Frontiers in Surgery. 2022; 9:862322. [DOI:10.3389/fsurg.2022.862322] [PMID]

- Arnold MH. Teasing out artificial intelligence in medicine: an ethical critique of artificial intelligence and machine learning in medicine. Journal of Bioethical Inquiry. 2021; 18(1):121-39. [DOI:10.1007/s11673-020-10080-1] [PMID]

- Tam W, Huynh T, Tang A, Luong S, Khatri Y, Zhou W. Nursing education in the age of artificial intelligence powered Chatbots (AI-Chatbots): Are we ready yet? Nurse Education Today. 2023; 129:105917. [DOI:10.1016/j.nedt.2023.105917] [PMID]

- Belk R. Ethical issues in service robotics and artificial intelligence. The Service Industries Journal. 2020; 41:1-17. [DOI:10.1080/02642069.2020.1727892]

- Karimian G, Petelos E, Evers S. The ethical issues of the application of artificial intelligence in healthcare: a systematic scoping review. AI and Ethics. 2022; 2:1-13. [DOI:10.1007/s43681-021-00131-7]

- Rubeis G. The disruptive power of Artificial Intelligence. Ethical aspects of gerontechnology in elderly care. Archives of Gerontology and Geriatrics. 2020; 91:104186. [DOI:10.1016/j.archger.2020.104186] [PMID]

- Saheb T, Saheb T, Carpenter DO. Mapping research strands of ethics of artificial intelligence in healthcare: A bibliometric and content analysis. Computers in Biology and Medicine. 2021; 135:104660. [DOI:10.1016/j.compbiomed.2021.104660] [PMID]

- Ibuki T, Ibuki A, Nakazawa E. Possibilities and ethical issues of entrusting nursing tasks to robots and artificial intelligence. Nursing Ethics. 2024; 31(6):1010-20. [DOI:10.1177/09697330221149094] [PMID]

- Ashok M, Madan R, Joha A, Sivarajah U. Ethical framework for Artificial Intelligence and Digital technologies. International Journal of Information Management. 2022; 62:102433. [DOI:10.1016/j.ijinfomgt.2021.102433]

- Baum SD. Social choice ethics in artificial intelligence. AI & Society. 2020; 35(1):165-76. [DOI:10.1007/s00146-017-0760-1]

- Brendel AB, Mirbabaie M, Lembcke TB, Hofeditz L. Ethical management of artificial intelligence. Sustainability. 2021; 13(4):1974. [DOI:10.3390/su13041974]

- Ma L, Zhang Z, Zhang N. Ethical dilemma of artificial intelligence and its research progress. IOP Conference Series: Materials Science and Engineering. 2018; 392:062188. [DOI:10.1088/1757-899X/392/6/062188]

- Jabotinsky HY, Sarel R. Co-authoring with an AI? Ethical dilemmas and artificial intelligence. Arizona State Law Journal, Forthcoming. 2024; 56:187. [DOI:10.2139/ssrn.4303959]

- Denning PJ, Denning DE. Dilemmas of artificial intelligence. Communications of the ACM. 2020; 63(3):22-4. [DOI:10.1145/3379920]

- Strümke I, Slavkovik M, Madai VI. The social dilemma in artificial intelligence development and why we have to solve it. AI and Ethics. 2022; 2(4):655-65. [DOI:10.1007/s43681-021-00120-w]

- Abdolahi Shahvali E, Arizavi Z, Hematipour H, Jahangirimehr A. Investigating the level of knowledge, attitude and performance of students regarding the applications of artificial intelligence in nursing. Jundishapur Scientific Medical Journal. 2024; 23(2):134-42. [DOI:10.32592/JSMJ.23.2.134]

- Eskandari S. Ethical challenges of using artificial intelligence in article writing. Education and Ethics in Nursing. 2024; 13(1-2):4-6. [DOI:10.22034/ethic.2024.2029584.1051]

- Rony MKK, Akter K, Debnath M, Rahman M, Johra F, Akter F, et al. Strengths, weaknesses, opportunities and threats (SWOT) analysis of artificial intelligence adoption in nursing care. Journal of Medicine, Surgery, and Public Health. 2024; 3:100113. [DOI:10.1016/j.glmedi.2024.100113]

- Sisk BA, Antes AL, DuBois JM. An overarching framework for the ethics of artificial intelligence in pediatrics. JAMA Pediatrics. 2024; 178(3):213-4. [DOI:10.1001/jamapediatrics.2023.5761] [PMID]

- Mohammed SAAQ, Osman YMM, Ibrahim AM, Shaban M. Ethical and regulatory considerations in the use of AI and machine learning in nursing: A systematic review. International Nursing Review. 2025; 72(1):e70010. [DOI:10.1111/inr.70010] [PMID]

- Badawy W, Zinhom H, Shaban M. Navigating ethical considerations in the use of artificial intelligence for patient care: A systematic review. International Nursing Review. 2025; 72(3):e13059. [DOI:10.1111/inr.13059] [PMID]

- Huang C, Zhang Z, Mao B, Yao X. An overview of artificial intelligence ethics. IEEE Transactions on Artificial Intelligence. 2022; 4:799-819. [DOI:10.1109/TAI.2022.3194503]

- Stock-Homburg R, Kegel M. Ethical Considerations in Customer-Robot Service Interactions: Scoping review, network analysis, and future research Agenda. International Journal of Social Robotics. 2025; 17:1129-59. [DOI:10.1007/s12369-025-01239-0]

Type of Study: Systematic Review | Subject: Nursing

Received: 25/05/2025 | Accepted: 31/08/2025 | Published: 1/01/2026



This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.