Volume 24, Issue 4 (Winter 2024)

jrehab 2024, 24(4): 566-585


Soleimani F, Hasani Khiabani N, Yazdi L, Lornejad H, Aboulghasemi N, Shariatpanahi G. Designing and Assessing the Validity and Reliability of the Bayley-III Test Examiner Clinical Performance Scale. jrehab 2024; 24 (4) :566-585

URL: http://rehabilitationj.uswr.ac.ir/article-1-3228-en.html


Farin Soleimani1, Nahideh Hasani Khiabani *2, Leila Yazdi3, Hamidreza Lornejad4, Naria Aboulghasemi4, Ghazal Shariatpanahi5

1- Pediatric Neurorehabilitation Research Center, University of Social Welfare and Rehabilitation Sciences, Tehran, Iran. Kodakyar St, Daneshjo Blvd, Evin, Tehran, Iran. Post code: 1988713831. Telfax: 009821-71732846

2- Children Development Section, Zahra Mardani Azar Children's Hospital, Tabriz University of Medical Sciences, Tabriz, Iran. nahid_md2000@yahoo.com

3- Homayoun Evolution Center, Shahid Beheshti University of Medical Sciences, Tehran, Iran. No. 243, Safaee Ave., Janbazan St, Sabalan Sq. Post code: 1635736811

4- Child Health Office, Ministry of Health and Medical Education, Tehran, Iran.

5- Department of Pediatric Infectious Disease, Bahrami Hospital, Tehran University of Medical Sciences, Tehran, Iran. Unit 16, number 12, 20th street, North Allameh, Saadat Abad. Postal Code: 1997977441



Introduction

Early diagnosis of developmental disorders and the provision of intervention services for infants and toddlers have gained increasing significance. Timely and periodic evaluation of development makes early diagnosis and treatment possible and prevents the loss of the child’s developmental potential [7, 8]. Efforts to diagnose at an early age are increasing because intervention programs are more cost-effective and have a greater impact at this stage [9]. Early interventions improve children’s developmental prognosis [10], with short- and long-term positive effects [11, 12]. Currently, most specialists monitor a child’s development based on their clinical judgment, but several studies have shown that clinical judgment alone is ineffective in diagnosing developmental delays [9, 13, 14]. The evidence indicates that, on average, only 30% of children with developmental or behavioral problems are diagnosed by a doctor without a screening tool [11, 15, 16]. For this reason, many developmental screening tests have been designed and provided to children’s caregivers. Diagnostic tests of developmental disorders should then be used to definitively diagnose the cases flagged as developmental delay in the screening tests.

Compared to the many screening tests, such as the Ages and Stages Questionnaires (ASQ-3) and the Denver Developmental Screening Test (Denver-II), diagnostic tests for developmental disorders are limited and, in most cases, diagnose developmental disorders in only one area. These tests include the Battelle Developmental Inventory screener (BDI-2), the MacArthur-Bates short forms (SFI and SFII), and the World Health Organization (WHO) motor milestones.

The “Bayley developmental scales” diagnostic test is one of the few globally valid diagnostic tests that, in addition to being comprehensive in all developmental areas, has high psychometric indicators. This test is an individual evaluation tool that assesses the developmental performance of children aged 1 to 42 months in 3 developmental domains: Cognitive, language, and motor. The evaluation of these three areas is done objectively by the examiner.

Therefore, caregivers in Iran’s health system have chosen and implemented the Bayley test to diagnose and evaluate developmental disorders in children. To administer the test, examiners are first trained by instructors in a theoretical and practical training course. After completing the course, the examiners should practice the test in healthcare centers to acquire the necessary skills. They should then send three videos of the test performed on a healthy child to be evaluated by the instructors in order to receive a certificate of skill in performing the test. Because no tool exists, inside or outside the country, to assess competence in conducting the test, different trainers have always faced challenges in accepting or rejecting the clinical qualification of these examiners.

Therefore, the current research was conducted at the request of the Ministry of Health and Medical Education to design a standard instrument with acceptable validity and reliability to evaluate the clinical performance of Bayley test examiners.

Materials and Methods

This study was carried out in two parts, building the tool and determining its validity and reliability, in six steps: (1) conducting a review of the literature; (2) explaining the concepts of clinical practice according to the results of the literature review and the opinions of experts; (3) compiling the initial draft and the final items of the tool; (4) adjusting the scoring structure and its interpretation; (5) determining the face validity of the items by calculating the impact score; and (6) determining the quantitative content validity of the items by calculating the content validity ratio (CVR) and the content validity index (CVI).

The target population was the examiners of the Bayley test, and the statistical population was the trainers or evaluators. The inclusion criteria were being a national instructor or examiner with 5 years of experience in conducting the test. The exclusion criterion was unwillingness to participate in the study. Purposive sampling was used to select the participants. The sample comprised 10 people for calculating face and content validity and 8 for internal consistency, inter-rater reliability, and test-retest reliability.

After a focused group discussion with a team of experts, collecting their opinions, and reviewing the literature, we concluded that this concept includes two parts: Specific clinical performance (the performance of the examiner in implementing the test items according to the instructions in three cognitive, linguistic, and motor scales) and general clinical performance (examiner’s performance in complying with the general requirements of the test).

The initial draft had 111 items, compiled in the specific section (94 items) and the general section (17 items) using the Delphi method. Specific items were scored on a 5-point Likert scale: 1) Does not comply with the guidelines at all, 2) Does not comply with the guidelines in many cases, 3) Does not comply with the guidelines in some cases, 4) Is acceptable but needs to be improved, and 5) Is favorable and follows the instructions.

To determine quantitative face validity, the initial draft was sent to the group of experts, who were asked to give each item a score of 1 to 5 according to its importance. In this evaluation, a score of 1 indicates the lowest and a score of 5 the highest level of importance. In the next step, items with an impact score of less than 1.5 were removed, and the structure of the other items was reviewed and revised.

CVR and CVI were used to determine the content validity of the tool. The final items of the tool were sent to a group of 10 trainers and examiners, who were asked to rate each item as “necessary”, “helpful but not necessary”, or “not necessary” to determine the CVR of each item. According to the minimum acceptable CVR based on the number of experts, items with a CVR of less than 0.6 were excluded. To calculate the CVI of each item, experts were asked to rate the clarity, simplicity, and relevance of each item on a 4-point scale. If the CVI of an item was less than 0.7, the item was removed; if it was between 0.7 and 0.79, it was revised; and if it was greater than 0.79, it was considered acceptable.
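The three indices described above can be illustrated with a minimal sketch. The article does not give its formulas, so the code assumes the commonly used definitions: the impact score as the proportion of experts scoring an item 4 or 5 multiplied by the mean importance score, Lawshe's CVR, and an item-level CVI equal to the share of experts rating the item 3 or 4 on the 4-point scale. All function names and the sample ratings are hypothetical and are not taken from the study data.

```python
from statistics import mean


def impact_score(importance_ratings):
    """Assumed definition: share of experts scoring 4 or 5, times the mean score."""
    n = len(importance_ratings)
    frequency = sum(1 for r in importance_ratings if r >= 4) / n
    return frequency * mean(importance_ratings)


def content_validity_ratio(n_necessary, n_experts):
    """Lawshe's CVR = (n_e - N/2) / (N/2), with n_e experts rating 'necessary'."""
    half = n_experts / 2
    return (n_necessary - half) / half


def item_cvi(ratings_4_point):
    """Assumed definition: share of experts rating the item 3 or 4 out of 4."""
    return sum(1 for r in ratings_4_point if r >= 3) / len(ratings_4_point)


def cvi_decision(cvi):
    """Decision rule stated in the text: <0.7 remove, 0.7 to 0.79 revise, else accept."""
    if cvi < 0.7:
        return "remove"
    if cvi <= 0.79:
        return "revise"
    return "accept"


# Hypothetical ratings from 10 experts for a single item.
importance = [5, 5, 4, 4, 4, 3, 5, 4, 2, 5]
relevance = [4, 4, 3, 3, 4, 2, 3, 4, 2, 2]

print("impact score:", round(impact_score(importance), 2))
print("CVR (9 of 10 say 'necessary'):", content_validity_ratio(9, 10))
print("CVI:", item_cvi(relevance), "->", cvi_decision(item_cvi(relevance)))
```

Under these assumptions, an item with a CVI of exactly 0.7 falls in the "revise" band, which matches how the text treats the lower boundary.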

The reliability of the tool was determined in three stages. Six complete videos of the Bayley test being performed in six age groups (3-4 months, 5-6 months, 12 months, 20 months, 31-32 months, and 42 months) were prepared and sent to 8 trainers.

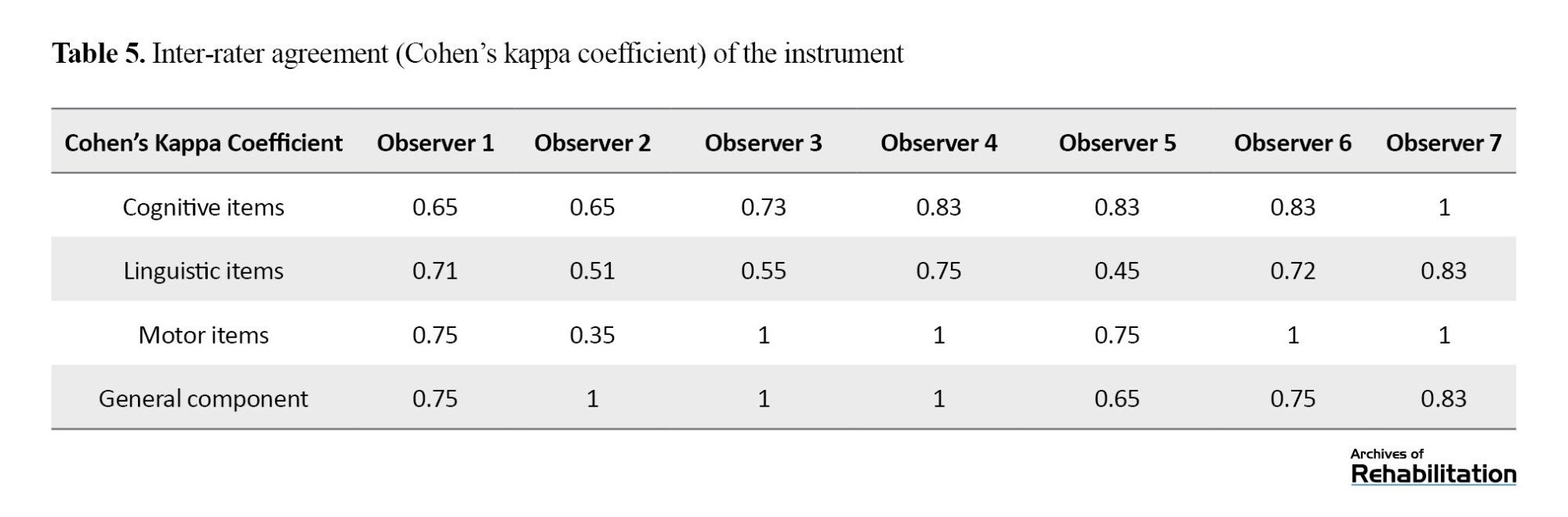

In the first step, to determine test-retest reliability, the evaluators were asked to evaluate the films twice, with a 2-week interval (without referring to their first results), and to complete the checklist each time. The scores of the evaluators were summarized and analyzed by calculating the intraclass correlation coefficient (ICC).

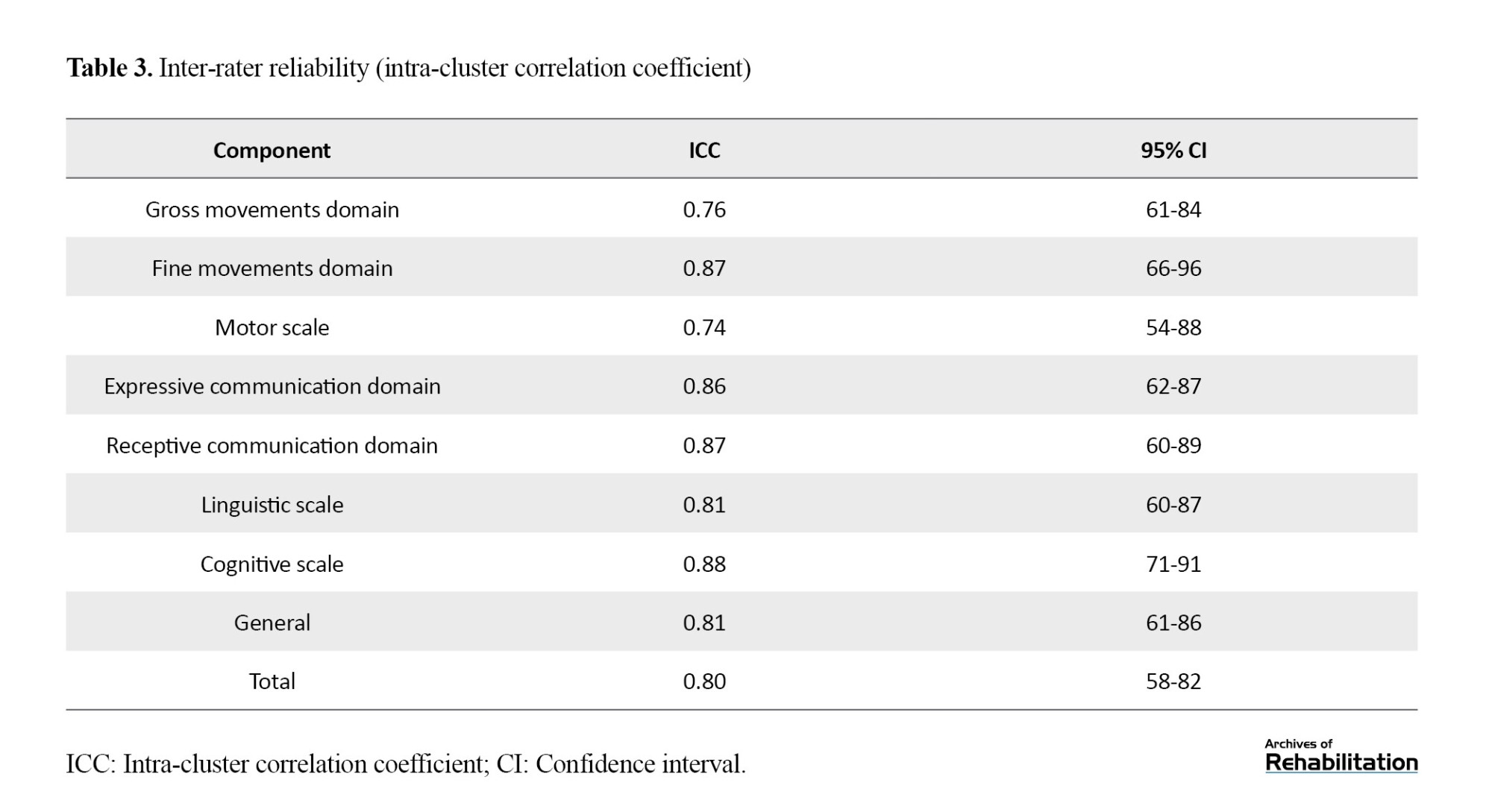

In the second step, ICC was used to determine the instrument’s reliability between evaluators with a confidence interval (CI) of 95%. According to studies, ICC values less than 0.5 indicate poor reliability, values between 0.5 and 0.75 indicate moderate reliability, values between 0.75 and 0.90 indicate good reliability and values above 0.9 indicate an excellent estimate of reliability [17].
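A minimal sketch of how an ICC can be computed and interpreted with the thresholds above is given below. The article does not state which ICC form was used, so the two-way random-effects, absolute-agreement, single-rater form, often written ICC(2,1), is an assumption here, as is the handling of values that fall exactly on a threshold. The function names and the score matrix are hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1) from a table of scores: one row per video, one column per rater."""
    n = len(scores)          # number of rated subjects (videos)
    k = len(scores[0])       # number of raters
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def interpret_icc(icc):
    """Bands from the text; assignment of exact boundary values is an assumption."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"


# Hypothetical total scores: 6 videos (rows) rated by 4 evaluators (columns).
ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
value = icc_2_1(ratings)
print(round(value, 2), interpret_icc(value))
```

The same function can be applied to test-retest data by treating the two rating occasions as the two columns.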

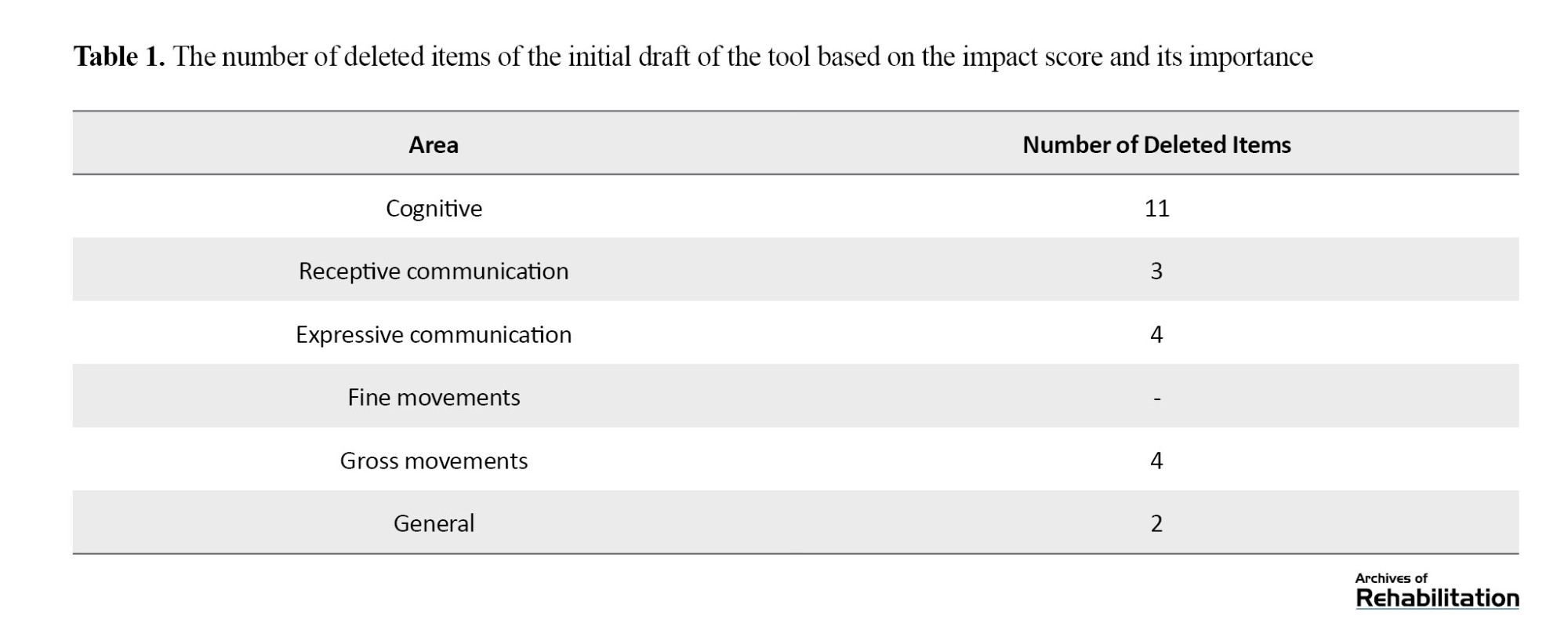

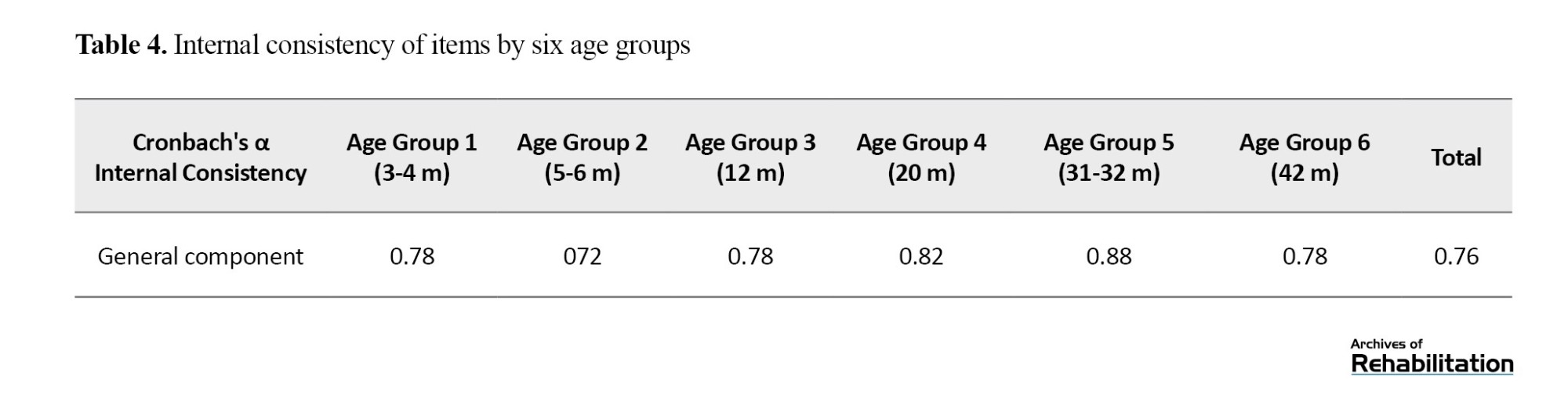

In the third step, the Cronbach's α coefficient was used to check the internal consistency of the items. This coefficient usually ranges between 0 and 1, and values closer to 1 indicate that the instrument under study has higher internal consistency. A Cronbach's α coefficient of more than 0.70 is often considered acceptable.
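For illustration, Cronbach's α can be computed from a table of item scores with the standard formula α = k/(k-1) × (1 - Σ item variances / variance of total scores). The sketch below is not the authors' analysis code; the function name and the sample scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """item_scores: one row per completed checklist, one column per item."""
    k = len(item_scores[0])
    # Sample variance of each item across checklists.
    item_variances = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of the total score of each checklist.
    total_variance = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical scores on 2 items from 4 completed checklists.
scores = [[2, 3], [4, 4], [3, 5], [5, 6]]
alpha = cronbach_alpha(scores)
print(round(alpha, 3), "acceptable" if alpha > 0.70 else "below the usual threshold")
```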

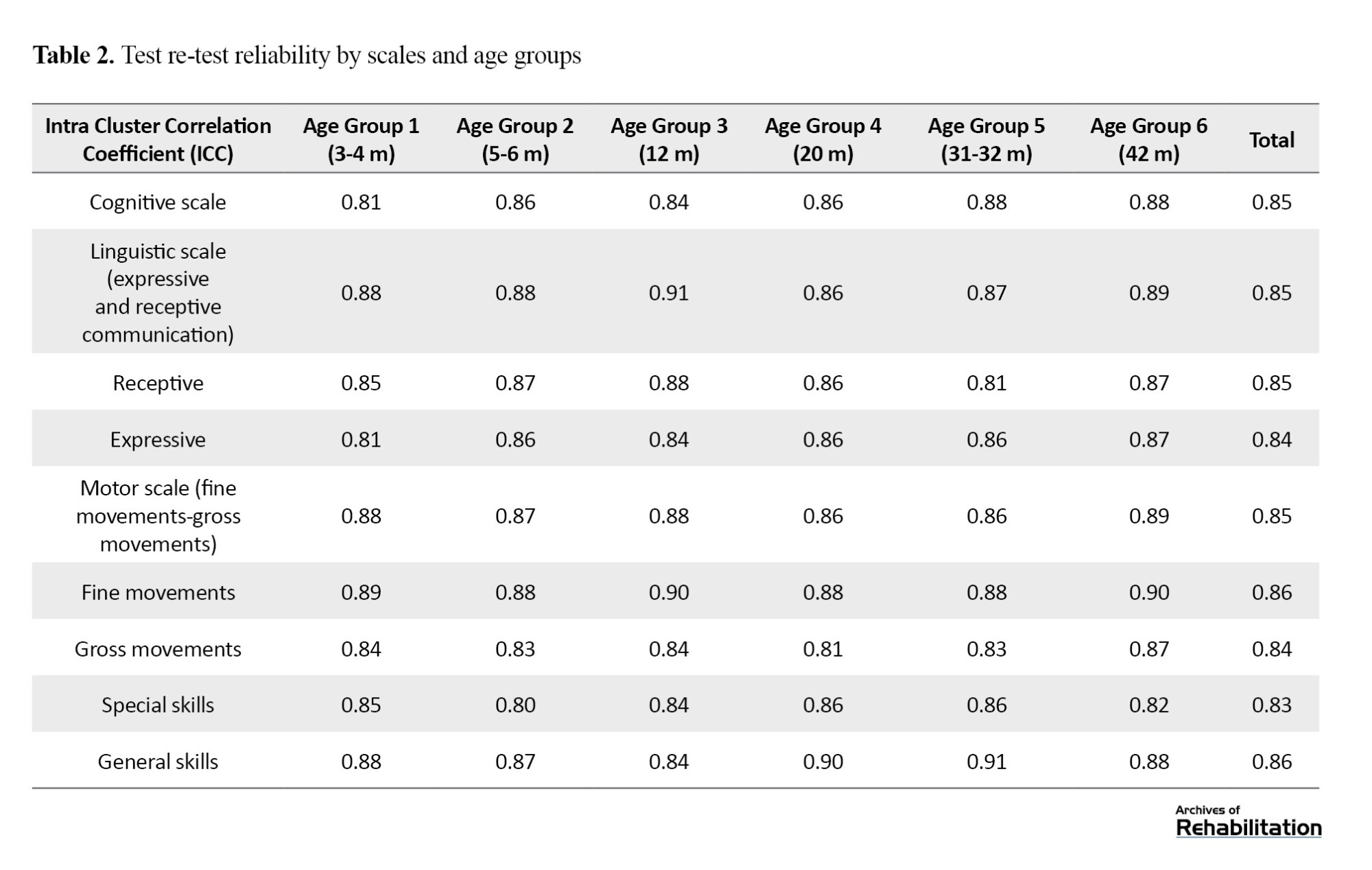

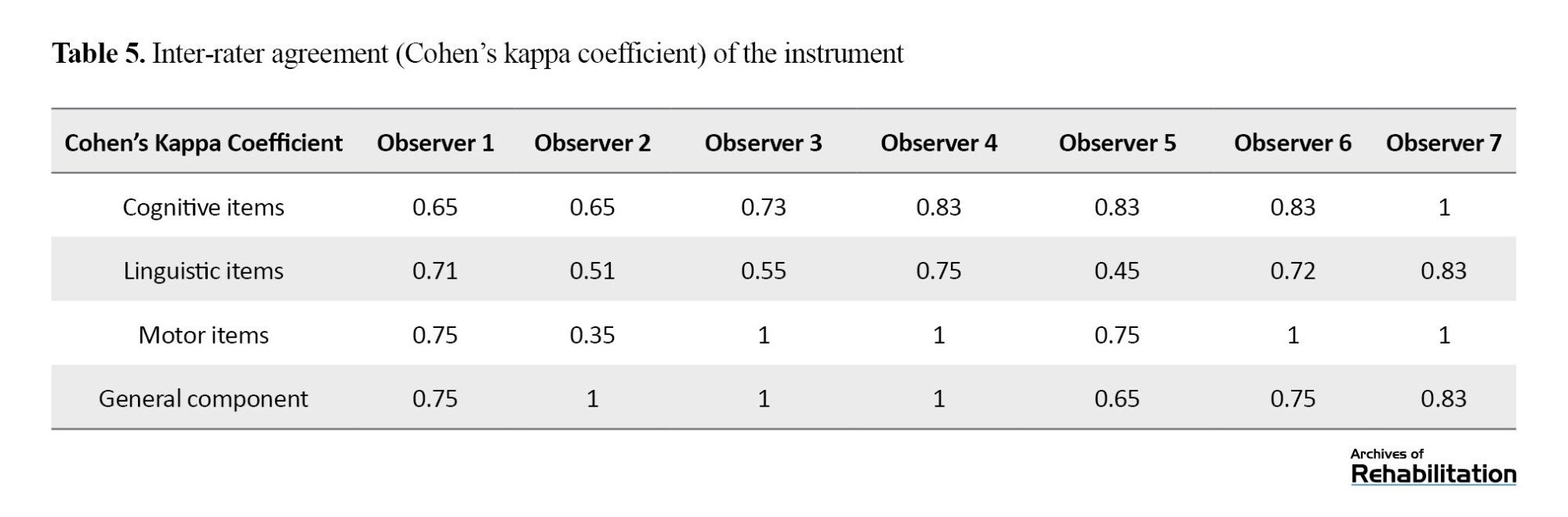

Finally, the Cohen kappa coefficient was used to determine the inter-rater agreement between each observer and the reference observer. The kappa coefficient ranges from -1 to +1. The closer this number is to 1, the stronger the agreement, and a kappa value above 0.6 is considered acceptable [18].
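The agreement between one observer and the reference observer can be sketched as follows. The article does not say whether a weighted or unweighted kappa was used; the unweighted form, κ = (p_o - p_e) / (1 - p_e), is assumed here. The function name and the ratings are hypothetical.

```python
from collections import Counter


def cohen_kappa(observer, reference):
    """Unweighted Cohen's kappa for two equally long lists of categorical ratings."""
    n = len(observer)
    # Observed agreement: share of items on which both raters gave the same score.
    p_observed = sum(a == b for a, b in zip(observer, reference)) / n
    # Chance agreement from each rater's marginal distribution of scores.
    counts_obs = Counter(observer)
    counts_ref = Counter(reference)
    p_expected = sum(counts_obs[c] * counts_ref[c] for c in counts_obs) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical ratings of 4 items by one observer and the reference observer.
observer_scores = [1, 1, 0, 0]
reference_scores = [1, 1, 0, 1]
kappa = cohen_kappa(observer_scores, reference_scores)
print(kappa, "acceptable" if kappa > 0.6 else "below 0.6")
```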

Results

Quantitative face validity

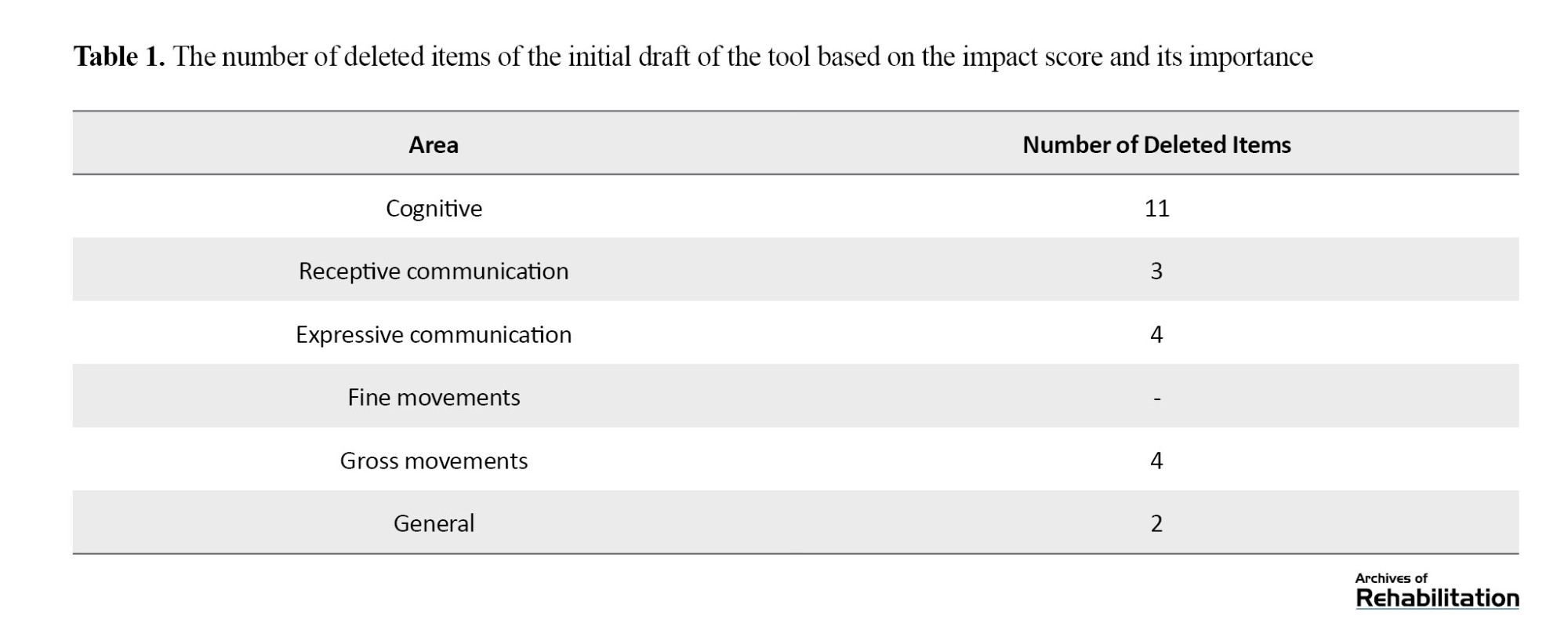

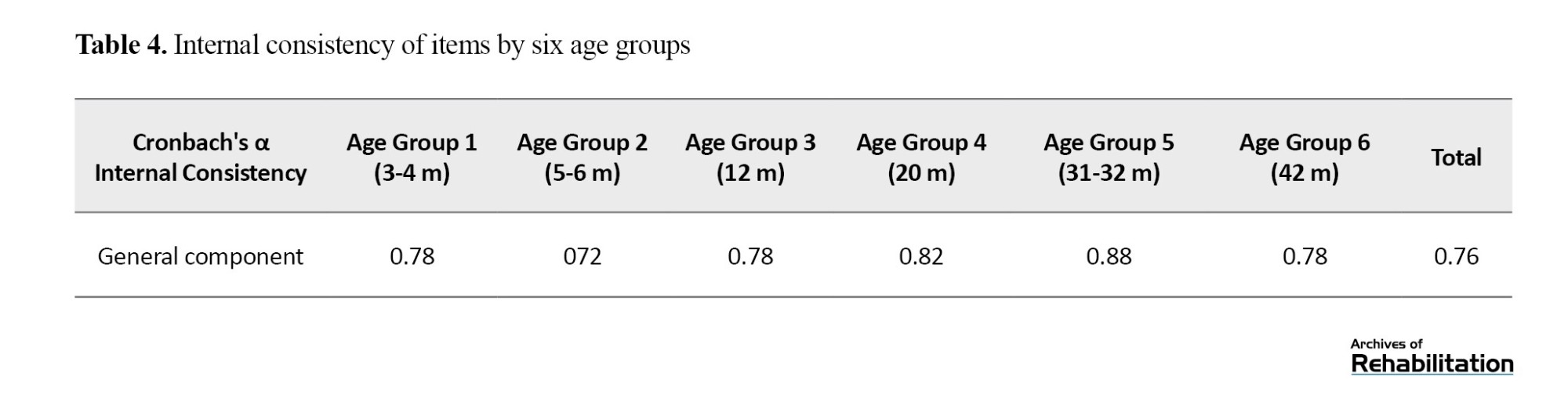

Based on the analysis of Likert scores, the items with an impact score of less than 4 (11 items from the cognitive part, 3 items from receptive communication, 4 items from expressive communication, 4 items from gross movements, and 2 items from the general section) were removed. The structure of other items was reviewed and revised (Table 1).

Content validity

According to the minimum acceptable CVR based on the number of experts (10 people), none of the items had a CVR less than 0.6, and no item was deleted. Based on the analysis of CVI scores, the items whose content validity index is less than 0.7 should be removed, and the items between 0.7 and 0.79 should be revised. In this study, all items had a content validity index higher than 0.79, except for item 29 of the cognitive scale, whose content validity index was equal to 0.7 and was revised.

The final tool was compiled with 66 items in the specific section (including 32 cognitive items, 13 receptive communication items, 9 expressive communication items, 9 fine movements items, and 3 gross movements items) and 14 items in the general section.

Reliability

The reliability of the tool was measured in four parts:

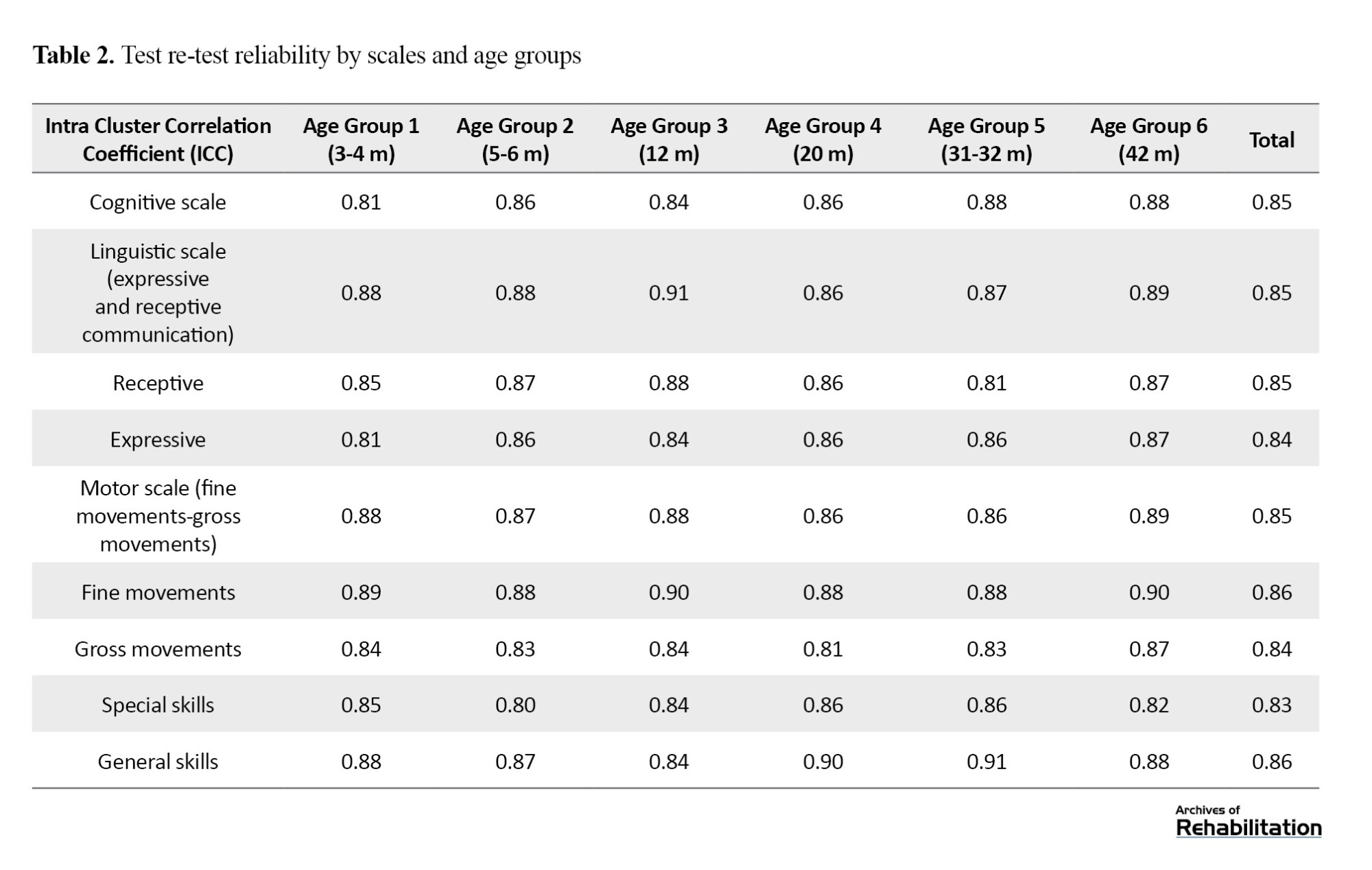

1. Time consistency (test-retest) was calculated with the ICC method for 6 age groups and two sections: the specific section, comprising the cognitive, linguistic (receptive and expressive communication), and movement (fine and gross movements) scales, and the general section (Table 2). The ICC in the specific and general sections was 0.83 and 0.86, respectively.

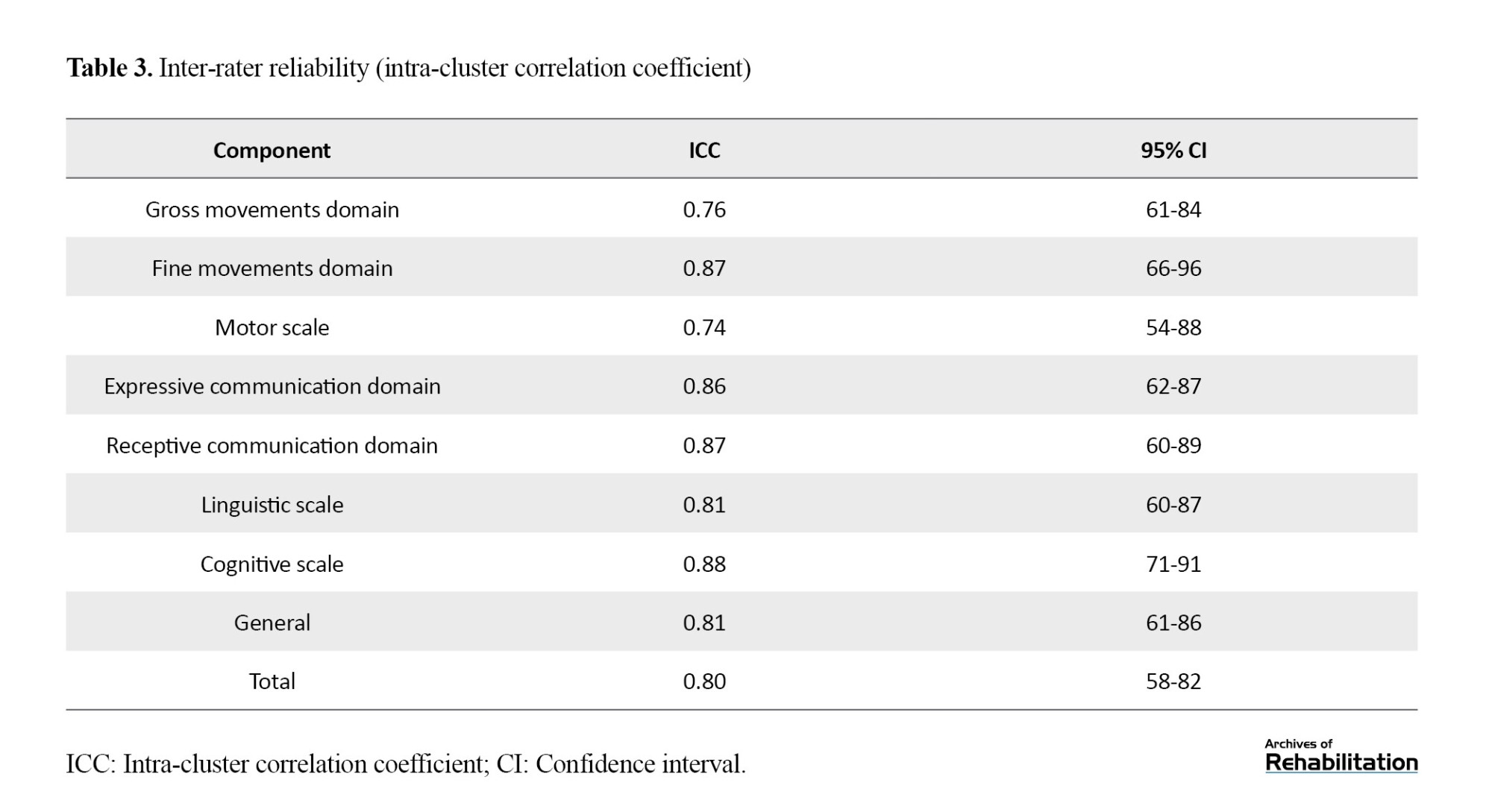

2. Inter-rater reliability, determined by the ICC method with 95% CI (Table 3), was 0.76 for movement items (95% CI, 0.54-0.88), 0.81 for linguistic items (95% CI, 0.60-0.87), 0.88 for cognitive items (95% CI, 0.71-0.91), 0.81 for the general section (95% CI, 0.61-0.86), and 0.80 for the whole instrument (95% CI, 0.58-0.82).

3. The internal consistency of the items, calculated with the Cronbach's α coefficient, was 0.76 for all the items in the general section of the tool (Table 4).

4. The degree of agreement between each of the 7 observers and the reference observer was calculated with the Cohen kappa coefficient. The highest value (equal to 1) was found for the movement items and the general section in 3 cases and for the cognitive scale in 1 case, and the lowest value was found for observer number 2 (Table 5). Overall, the Cohen kappa coefficients in the different scales indicate appropriate agreement between each observer and the reference observer.

Discussion

Studies show that a standard tool is essential for evaluating the skills of training recipients, and evaluation with a standard tool minimizes the possibility of error and the bias of the evaluator’s opinions. Finally, it improves the quality of the evaluation. Therefore, this study was conducted for the first time in Iran to design a valid and reliable tool to evaluate the clinical performance of the Bayley test examiner at the request of the Ministry of Health and Medical Education in Iran.

The review of domestic and foreign studies showed no tool specifically designed to evaluate the clinical performance of the Bayley tester. For this reason, similar tools have been investigated.

In a study conducted by Shayan et al. [3] to design and compile the logbook of 22 healthcare worker learners in Iran, a questionnaire with 17 modules and 205 skills was developed based on the approved curriculum of healthcare workers. The opinions of experts confirmed the face and content validity of the questionnaire. To determine the reliability of the questionnaire, only the internal consistency of the items was evaluated using the Cronbach's α, and test-retest and inter-rater reliability were not measured [3]. Our study developed a tool consisting of specific (66 items) and general (14 items) sections according to the skills the examiner should have in evaluating children aged 1 to 42 months in the Bayley test. Similar to the structure of the Bayley test, the specific part was taken from the content of the test to measure cognitive, linguistic, and motor scales, and the general part included the items that the examiner must follow while performing the test. The steps of the above study are carefully designed, but unlike our study, validity, reliability, and agreement between evaluators have not been calculated.

Another study was conducted in three stages by Mansourian et al. (2015): preparing a checklist for evaluating the practical skills of dental students and determining its validity and reliability in the first stage, applying the checklist in the second stage, and examining the results in the third stage. First, according to the goals in the approved educational curriculum, a draft of the checklist, including clinical skills, was prepared. To determine its validity, the checklist was approved by 5 professors and lecturers. The Lawshe method was used to check the content validity of the instrument. The test-retest method was used to check the reliability of the checklist. A video of the desired clinical skill being performed was prepared and provided to 5 professors. Two weeks later, the same professors reviewed and graded the film again. The correlation coefficient of the test and retest scores was 0.98 [4]. In this study, as in ours, the content validity and reliability of the checklist were determined using exact statistical methods. In our study, the test-retest ICC was 0.83 in the specific section and 0.86 in the general section, and the inter-rater ICC was 0.80 (95% CI, 0.58-0.82).

In another study conducted by Mokarami et al. (2019) to design a tool to evaluate the internship course in the field of occupational health engineering, three areas necessary for a graduate of this field were defined during 4 sessions. The initial questionnaire was designed with 44 items. In the next step, the importance of each item was checked by 10 experts. CVR and CVI were used to check quantitative content validity. The average CVI was 0.85, the average CVR was 0.76, and the impact scores of all the items of the tool were higher than 1.5. Internal consistency and test-retest methods were used to determine the reliability of the checklist. The final questionnaire included three fields and a total of 40 items. The Cronbach's α coefficient of the final questionnaire was 0.835 [5]. It is worth mentioning that this study sought to evaluate an internship course at an educational level. Therefore, it considered three areas: education and learning, behavioral goals, and management goals. In our study, however, the competence of the testers in conducting an observational test was considered. The specific part of our tool corresponds to the education and learning area of the above study, and the general part corresponds to its behavioral goals.

Another study was conducted by Yaghini et al. [6] to design a tool for medical students’ clinical competence to determine the minimum training and the number of clinical encounters during the pediatric internship. First, the educational minimums of the pediatric department of general medicine were extracted from the logbook. Then, these educational minimums were designed as a checklist using experts’ opinions. In the second step, the face and content validity of the checklist was confirmed by the faculty members of the pediatrics department. To confirm the reliability, this checklist was used to determine the number of clinical encounters, and the reliability of this checklist was calculated with the Cronbach's α coefficient at 0.82 [6]. It is noteworthy that in this study, the method of determining face and content validity and reliability is not clear and detailed.

Another study was conducted by Sahebalzamani et al. [19] (2012) to investigate the validity and reliability of using the direct observation of practical skills to evaluate the clinical skills of nursing students. A list of practical nursing procedures was provided to 45 professionals to determine content validity. Then, the evaluation checklist was developed. To evaluate inter-observer reliability, 20 students were assessed by two testers. The Cronbach's α coefficient was used to determine reliability by the total internal consistency method. The reliability of the test was measured by Cronbach's α coefficient of 0.94. The lowest and highest correlation coefficient values in the reliability between evaluators were 0.42 and 0.84, respectively, which were significant in all cases (P=0.001) [19]. It is worth mentioning that in this study, 20 students were evaluated by two testers to assess the inter-observer reliability. It seems that the determination of reliability with the similarity of the opinion of only two examiners should be investigated.

Another study was conducted by Jabbari et al. [20] entitled “designing and determining the validity and reliability of the tool for evaluating the clinical competence of 24 occupational therapists”. In this research, the initial questionnaire was prepared with 128 items. After reviews and revisions by the expert group, the checklist was reduced to 66 items. The Lawshe method was used to check face and content validity, and CVR and CVI were used to determine content validity. The questionnaire was then reduced from 66 items to 54 items. The correlation coefficient of two tests administered two weeks apart was used to confirm the tool’s reliability. The Cronbach's α coefficient was also calculated to determine the internal consistency of the questionnaire. The cut-off point of this questionnaire was calculated as 162 [20]. The above study used a suitable method in designing the tool and determining its validity and reliability.

Conclusion

One of the limitations of our study was the small number of qualified trainers as evaluators in the country. Also, according to the structure of the Bayley test, it was impossible to measure the internal consistency in the specific section.

Considering that communication skills are an important part of health services and the ability to establish proper communication forms the basis of clinical practice, it is suggested that a suitable tool be designed to evaluate the communication skills of the Bayley tester.

So far, the clinical performance of the Bayley test examiner has been evaluated only by watching the videos sent by the examiner, without a standard tool. Because such evaluations depend on the appraiser’s opinions, the results are not acceptable. Therefore, for the first time, this study designed a comprehensive and valid tool to measure the clinical performance of the Bayley test examiner in Iran.

According to the qualitative stages of tool design, the tool designed to evaluate the clinical performance of the Bayley test examiner has the necessary validity and reliability.

Ethical Considerations

Compliance with ethical guidelines

Before starting the study, the ethical code number was obtained from the Ethics Committee of the University of Social Welfare and Rehabilitation Sciences (Code: IR.USWR.REC.1400.273). Written consent, worded in simple and fluent language, was obtained to film the children and send the videos to the expert group. The children’s parents were fully informed about the reason for filming and how the videos would be used. The parents were assured that consent was completely optional and that they would not be deprived of the center’s services if they did not consent to filming.

Funding

This study was conducted with the financial support of the Children’s Health Department of the Ministry of Health and Medical Education.

Authors' contributions

Conceptualization, methodology, and writing the original draft: All authors; Validation, analysis, research, review, and sources: Farin Soleimani, Nahideh Hasani Khiabani, and Leila Yazdi; Editing: Farin Soleimani and Nahideh Hasani Khiabani; Project management: Farin Soleimani.

Conflict of interest

All authors declared no conflict of interest.

Acknowledgments

The authors would like to express their sincere gratitude to the Pediatric Neurorehabilitation Research Center; the research vice president of Rehabilitation and Social Health Science University; the vice president of health of Tabriz University of Medical Sciences; the vice president of health of Shahid Beheshti University of Medical Sciences; and the mentors and examiners of the Bayley test at the country's medical sciences universities, who participated as expert groups in the design of the instrument items and the evaluation of the videos. The authors also thank the children's parents for their contribution to this project.

The value of early diagnosis of developmental disorders and providing intervention services for infants and toddlers has gained increasing significance. Timely and periodic evaluation of development offers the possibility of early diagnosis and treatment and prevents the loss of developmental potential of the child [7، 8]. The reason for increasing efforts in diagnosis at an early age is the cost-effectiveness and higher impact of intervention programs at this stage [9]. The early interventions improve children’s developmental prognosis [10] with short- and long-term positive effects [11، 12]. Currently, most specialists monitor a child’s development based on their clinical judgment, but several studies have shown that clinical judgment alone is ineffective in diagnosing developmental delays [9، 13، 14]. The evidence indicates that, on average, only 30% of children with developmental or behavioral problems are diagnosed by a doctor without a screening tool [11، 15، 16]. For this purpose, many developmental screening tests have been designed and provided to children’s caregivers. Diagnostic tests of developmental disorders should be used to definitively diagnose the cases referred to as developmental delay in the screening tests.

Compared to the several screening tests, such as the ages and stages questionnaires (ASQ-3) and the Denver developmental screener test (Denver-II), diagnostic tests for developmental disorders are limited and, in most cases, only diagnose developmental disorders in one area. These tests include Battelle developmental inventory screener (BDI-2), MacArthur-Bates short-forms (SFI and SFII) tests, and World Health Organization (WHO) motor milestones.

The “Bayley developmental scales” diagnostic test is one of the few globally valid diagnostic tests that, in addition to being comprehensive in all developmental areas, has high psychometric indicators. This test is an individual evaluation tool that assesses the developmental performance of children aged 1 to 42 months in 3 developmental domains: Cognitive, language, and motor. The evaluation of these three areas is done objectively by the examiner.

Therefore, caregivers in Iran’s health system have chosen and implemented Bayley’s test to diagnose and evaluate developmental disorders in children. To do this screening, first, the instructors train examiners to perform the test in a theoretical and practical training course. After completing the training course, the testers should practically implement the test in healthcare centers to acquire the necessary skills. They should send three videos of the test done on a healthy child to be evaluated by the instructors and get a certificate of skill in performing the test. Because there is no tool to assess the competence in conducting the test inside and outside the country, there have always been challenges in accepting or rejecting the clinical qualification of these testers by different trainers.

Therefore, the current research was conducted at the request of the Ministry of Health and Medical Education to design a standard instrument with acceptable validity and reliability to evaluate the clinical performance of Bayley test examiners.

Materials and Methods

This study was carried out in two parts: Building the tool and determining its validity and reliability in six steps. These steps are as follows: Conducting a review of the literature, explaining the concepts of clinical practice according to the results of the review of the literature and the opinions of experts, compiling the initial draft and the final items of the tool, adjusting the scoring structure and its interpretation, determining the face validity of the items by calculating the impact score, determining the quantitative content validity of the items by calculating the relative content validity ratio (CVR) and the content validity index (CVI).

The target population was the examiners of the Bayley test, and the statistical population was the trainers or evaluators. The inclusion criteria included national instructors and examiners with 5 years of experience in conducting the test. The exclusion criterion was an unwillingness to participate in the study. A purposive sampling was used to select the participants. The sample comprised 10 people for calculating face and content validity and 8 for internal consistency, inter-rater reliability, and test re-test.

After a focused group discussion with a team of experts, collecting their opinions, and reviewing the literature, we concluded that this concept includes two parts: Specific clinical performance (the performance of the examiner in implementing the test items according to the instructions in three cognitive, linguistic, and motor scales) and general clinical performance (examiner’s performance in complying with the general requirements of the test).

The initial draft had 111 items, compiled in the specific section (94 items) and the general section (17 items) using the Delphi method. Specific items were scored on a 5-point Likert scale: 1) Does not comply with the guidelines at all, 2) Does not comply with the guidelines in many cases, 3) Does not comply with the guidelines in some cases, 4) Is acceptable but needs to be improved, and 5) It is favorable and following the instructions. To determine quantitative face validity, the initial draft was sent to the group of experts, and they were asked to give each item a score of 1 to 5 according to its importance. In this evaluation, a score of 1 indicates the lowest, and a score of 5 indicates the highest level of importance. In the next step, items with an impact score of less than 1.5 were removed, and the structure of other items was reviewed and revised. CVR and CVI were used to determine the content validity of the tool. The final items of the tool were sent to a group of 10 trainers and examiners, and they were asked to evaluate each item in terms of three aspects, including “necessary”, “helpful but not necessary”, and “not necessary”, to determine the CVR of each item. According to the minimum acceptable CVR based on the number of experts, items with a CVR score of less than 0.6 were excluded. To calculate the CVI of each item, experts were asked to determine the degree of clarity, simplicity, and relevance of each item with a 4-part spectrum. If the CVI of an item was less than 0.7, the item was removed; if it was between 0.7 and 0.79, it was revised; and if it was greater than 0.79, it was considered acceptable.

Determining the reliability of the tool was implemented in three stages. Six complete videos of performing the Bayley test of six age groups (3-4 months, 5-6 months, 12 months, 20 months, 31-32 months, and 42 months) were prepared and sent to 8 trainers.

In the first step, to determine the reliability of the tests, the evaluators were asked to evaluate the films twice, with a 2-week interval (without referring to the results of the first time), and complete the checklist (test re-test). The scores of the evaluators were summarized and subjected to statistical analysis by calculating the intra-cluster correlation coefficient (ICC).

In the second step, ICC was used to determine the instrument’s reliability between evaluators with a confidence interval (CI) of 95%. According to studies, ICC values less than 0.5 indicate poor reliability, values between 0.5 and 0.75 indicate moderate reliability, values between 0.75 and 0.90 indicate good reliability and values above 0.9 indicate an excellent estimate of reliability [17].

In the third step, the Cronbach's α coefficient was used to check the internal consistency of the items. This coefficient ranges between 0 and 1, and the values closer to 1 indicate that the instrument under study has a higher internal consistency. The acceptable Cronbach's α coefficient is often considered more than 0.70.

Finally, the Cohen kappa coefficient was used to determine the inter-rater agreement between each observer and the reference observer. The size of the kappa coefficient in statistical analysis ranges from -1 to +1. The closer this number is to 1, the more proportional the agreement is, and a kappa value above 0.6 is acceptable [18].

Results

Quantitative face validity

Based on the analysis of Likert scores, the items with an impact score of less than 4 (11 items from the cognitive part, 3 items from receptive communication, 4 items from expressive communication, 4 items from gross movements, and 2 items from the general section) were removed. The structure of other items was reviewed and revised (Table 1).

Content validity

According to the minimum acceptable CVR based on the number of experts (10 people), none of the items had a CVR less than 0.6, and no item was deleted. Based on the analysis of CVI scores, the items whose content validity index is less than 0.7 should be removed, and the items between 0.7 and 0.79 should be revised. In this study, all items had a content validity index higher than 0.79, except for item 29 of the cognitive scale, whose content validity index was equal to 0.7 and was revised.

The final tool was compiled with 66 items in the specific section (including 32 cognitive items, 13 receptive communication items, 9 expressive communication items, 9 fine movements items, and 3 gross movements items) and 14 items in the general section.

Reliability

The reliability of the tool was measured in four parts:

1. Time consistency (test re-test) was calculated with the ICC method for 6 age groups and for two sections: the specific section, comprising cognitive, linguistic (receptive and expressive communication), and movement (fine and gross movements) items, and the general section (Table 2). The ICC in the specific and general sections was 0.83 and 0.86, respectively.

2. Inter-rater reliability, calculated with the ICC method (Table 3), was 0.76 for movement items (95% CI, 54%-88%), 0.81 for linguistic items (95% CI, 60%-87%), 0.88 for cognitive items (95% CI, 71%-91%), 0.81 for the general section (95% CI, 61%-86%), and 0.80 for the whole instrument (95% CI, 58%-82%).

3. The internal consistency of the items, calculated with the Cronbach's α coefficient, was 0.76 for all the items in the general section of the tool (Table 4).

4. The degree of agreement between 7 observers and the reference observer was calculated with the Cohen kappa coefficient. The highest value (equal to 1) was obtained in 3 cases for the movement items and the general section and in 1 case for the cognitive scale, and the lowest value was obtained for observer number 2 (Table 5). Overall, the Cohen kappa coefficients across the scales indicate appropriate agreement between each observer and the reference observer.

Discussion

Studies show that a standard tool is essential for evaluating the skills of training recipients, and evaluation with a standard tool minimizes the possibility of error and the bias of the evaluator’s opinions. Finally, it improves the quality of the evaluation. Therefore, this study was conducted for the first time in Iran to design a valid and reliable tool to evaluate the clinical performance of the Bayley test examiner at the request of the Ministry of Health and Medical Education in Iran.

The review of domestic and foreign studies showed no tool specifically designed to evaluate the clinical performance of the Bayley tester. For this reason, similar tools have been investigated.

In a research conducted by Shayan et al. [3] to design and compile the logbook of 22 healthcare worker learners in Iran, a questionnaire with 17 modules and 205 skills was developed based on the approved curriculum of healthcare workers. The opinions of experts confirmed the face and content validity of the questionnaire. To determine the reliability of the questionnaire using the Cronbach's α, only the internal consistency of the items was evaluated, and the test re-test and inter-rater reliability were not measured [3]. Our study developed a tool consisting of specific (66 items) and general (14 items) sections according to the skills the examiner should have in evaluating children aged 1 to 42 months in the Bayley test. Similar to the structure of the Bayley test, the specific part was taken from the content of the test to measure cognitive, linguistic, and motor scales, and the general part included the items that the examiner must follow while performing the test. The steps of conducting the above study are carefully designed, but unlike our study, validity, reliability, and agreement between evaluators have not been calculated.

Another study was conducted in three stages by Mansourian et al. (2015): preparing a checklist for evaluating the practical skills of dental students and determining its validity and reliability in the first stage, applying the checklist in the second stage, and examining the results in the third stage. First, according to the goals in the approved educational curriculum, a draft of the checklist, including clinical skills, was prepared. To determine its validity, the checklist was approved by 5 professors and lecturers. The Lawshe method was used to check the content validity of the instrument. The test re-test method was used to check the reliability of the checklist. A video of the desired clinical skill being performed was prepared and provided to 5 professors. Two weeks later, the same professors reviewed and graded the video again. The correlation coefficient of the test and re-test scores was 0.98 [4]. In that study, as in ours, the content validity and reliability of the checklist were determined using exact statistical methods. In our study, the test re-test ICC was 0.83 in the specific section and 0.86 in the general section, and the inter-rater ICC was 0.80 (95% CI, 58%-82%).

In another study, conducted by Mokarami et al. (2019) to design a tool for evaluating the internship course in occupational health engineering, three areas necessary for a graduate of this field were defined over 4 sessions. The initial questionnaire was designed with 44 items. In the next step, the importance of each item was checked by 10 experts. CVR and CVI were used to check quantitative content validity. The average CVI was 0.85, the average CVR was 0.76, and the impact scores of all the items of the tool were higher than 1.5. Internal consistency and test re-test methods were used to determine the reliability of the checklist. The final questionnaire included three areas and a total of 40 items, and its Cronbach's α coefficient was 0.835 [5]. It is worth mentioning that this study evaluated an internship course at the educational level and therefore considered three areas: education and learning, behavioral goals, and management goals. In our study, however, the competence of the testers in conducting an observational test was considered. The specific part of our tool corresponds to the education and learning area of the above study, and the general part corresponds to its behavioral goals.

Another study was conducted by Yaghini et al. [6] to design a tool for medical students’ clinical competence to determine the minimum training and the number of clinical encounters during the pediatric internship. First, the educational minimums of the pediatric department of general medicine were extracted from the logbook. Then, these educational minimums were designed as a checklist using experts’ opinions. In the second step, the face and content validity of the checklist was confirmed by the faculty members of the pediatrics department. To confirm the reliability, this checklist was used to determine the number of clinical encounters, and the reliability of this checklist was calculated with the Cronbach's α coefficient at 0.82 [6]. It is noteworthy that in this study, the method of determining face and content validity and reliability is not clear and detailed.

Another study was conducted by Sahebalzamani et al. [19] (2012) to investigate the validity and reliability of using the direct observation of practical skills to evaluate the clinical skills of nursing students. A list of practical nursing procedures was provided to 45 professionals to determine content validity. Then, the evaluation checklist was developed. To evaluate inter-observer reliability, 20 students were assessed by two testers. The Cronbach's α coefficient was used to determine reliability by the total internal consistency method. The reliability of the test was measured by Cronbach's α coefficient of 0.94. The lowest and highest correlation coefficient values in the reliability between evaluators were 0.42 and 0.84, respectively, which were significant in all cases (P=0.001) [19]. It is worth mentioning that in this study, 20 students were evaluated by two testers to assess the inter-observer reliability. It seems that the determination of reliability with the similarity of the opinion of only two examiners should be investigated.

Another study was conducted by Jabbari et al. [20] entitled “designing and determining the validity and reliability of the tool for evaluating the clinical competence of 24 occupational therapists”. In this research, the initial questionnaire was prepared with 128 items. After reviews and revisions by the expert group, the checklist was reduced to 66 items. The Lawshe method was used to check face and content validity, and CVR and CVI were used to determine content validity, after which the questionnaire was reduced from 66 to 54 items. The correlation coefficient of two tests administered two weeks apart was used to confirm the tool’s reliability. The Cronbach's α coefficient was also calculated to determine the internal consistency of the questionnaire. The cut-off point of this questionnaire was calculated as 162 [20]. The above study used a suitable method for designing the tool and determining its validity and reliability.

Conclusion

One of the limitations of our study was the small number of qualified trainers as evaluators in the country. Also, according to the structure of the Bayley test, it was impossible to measure the internal consistency in the specific section.

Considering that communication skills are an important part of health services and the ability to establish proper communication forms the basis of clinical practice, it is suggested that a suitable tool be designed to evaluate the communication skills of the Bayley tester.

So far, the clinical performance of the Bayley test examiner has been evaluated only by watching the videos sent by the examiner, without a standard tool. Because such evaluations depend on the appraiser’s opinions, their results are not acceptable. Therefore, for the first time, this study designed a comprehensive and valid tool to measure the clinical performance of the Bayley test examiner in Iran.

According to the qualitative stages of tool design, the tool designed to evaluate the clinical performance of the Bayley test examiner has the necessary validity and reliability.

Ethical Considerations

Compliance with ethical guidelines

Before starting the study, the ethical code number was obtained from the Ethics Committee of the University of Social Welfare and Rehabilitation Sciences (Code: IR.USWR.REC.1400.273). Written consent, worded in simple and fluent language, was obtained to film the children and send the videos to the expert group. The children’s parents were fully informed about the reason for filming and how the videos would be used. The parents were assured that consent was completely optional and that they would not be deprived of the center’s services if they did not consent to filming.

Funding

This study was conducted with the financial support of the Children’s Health Department of the Ministry of Health and Medical Education.

Authors' contributions

Conceptualization, methodology, and writing the original draft: All authors; Validation, analysis, research, review, and sources: Farin Soleimani, Nahideh Hasani Khiabani, and Leila Yazdi; Editing: Farin Soleimani and Nahideh Hasani Khiabani; Project management: Farin Soleimani.

Conflict of interest

All authors declared no conflict of interest.

Acknowledgments

The authors would like to express their sincere gratitude to the Pediatric Neurorehabilitation Research Center; the research vice president of Rehabilitation and Social Health Science University; the vice president of health of Tabriz University of Medical Sciences; the vice president of health of Shahid Beheshti University of Medical Sciences; and the mentors and examiners of the Bayley test from the country's medical sciences universities, who participated as expert groups in the design of the instrument items and the evaluation of the videos. The authors also thank the children's parents for their contribution to this project.

References

- Parsa Yekta Z, Ahmadi F, Tabari R. [Factors defined by nurses as influential upon the development of clinical competence (Persian)]. Journal of Guilan University of Medical Sciences. 2005; 14(54):9-23. [Link]

- Carr SJ. Assessing clinical competency in medical senior house officers: How and why should we do it? Postgraduate Medical Journal. 2004; 80(940):63-6. [DOI:10.1136/pmj.2003.011718] [PMID]

- Shayan S, Rafieyan M, Kazemi M. [Design and develop Logbook student’s nationwide health providers training courses of the entire country (Persian)]. Teb va Tazkie. 2018; 27(2):124-32. [Link]

- Mansourian A, Shirazian S, Jalili M, Vatanpour M, Arabi LPM. [Checklist development for assessing the dental students' clinical skills in oral and maxillofacial medicine course and comparison with global rating (Persian)]. Journal of Dental Medicine. 2016; 29(3):169-76. [Link]

- Mokarami H, Javid AB, Zaroug Hossaini R, Barkhordari A, Gharibi V, Jahangiri M, et al. [Developing and validating tool for assessing the field internship course in the field of occupational health engineering (Persian)]. Iran Occupational Health. 2019; 16(3):58-70. [Link]

- Yaghini O, Parnia A, Monajemi A, Daryazadeh S. [Designing a tool to assess medical students’ clinical competency in pediatrics (Persian)]. Research in Medical Education. 2018; 10(1):39-47. [DOI:10.29252/rme.10.1.39]

- Briggs-Gowan MJ, Carter AS, Irwin JR, Wachtel K, Cicchetti DV. The brief infant-toddler social and emotional assessment: Screening for social-emotional problems and delays in competence. Journal of Pediatric Psychology. 2004; 29(2):143-55. [DOI:10.1093/jpepsy/jsh017] [PMID]

- Halfon N, Regalado M, Sareen H, Inkelas M, Reuland CH, Glascoe FP, et al. Assessing development in the pediatric office. Pediatrics. 2004; 113(6 Suppl):1926-33. [DOI:10.1542/peds.113.S5.1926] [PMID]

- Rydz D, Srour M, Oskoui M, Marget N, Shiller M, Birnbaum R, et al. Screening for developmental delay in the setting of a community pediatric clinic: A prospective assessment of parent-report questionnaires. Pediatrics. 2006; 118(4):e1178-86. [DOI:10.1542/peds.2006-0466] [PMID]

- Mayson TA, Harris SR, Bachman CL. Gross motor development of Asian and European children on four motor assessments: A literature review. Pediatric Physical Therapy. 2007; 19(2):148-53. [DOI:10.1097/PEP.0b013e31804a57c1] [PMID]

- Soleimani F, Dadkhah A. Validity and reliability of Infant Neurological International Battery for detection of gross motor developmental delay in Iran. Child: Care, Health and Development. 2007; 33(3):262-5. [DOI:10.1111/j.1365-2214.2006.00704.x] [PMID]

- Levine DA. Guiding parents through behavioral issues affecting their child's health: The primary care provider's role. Ethnicity & Disease. 2006; 16(2 Suppl 3):S3-21-8. [PMID]

- Ristovska L, Jachova Z, Trajkovski V. Early detection of developmental disorders in primary health care. Paediatria Croatica. 2014; 58:8-14. [DOI:10.13112/PC.2014.2]

- Marks K, Hix-Small H, Clark K, Newman J. Lowering developmental screening thresholds and raising quality improvement for preterm children. Pediatrics. 2009; 123(6):1516-23. [DOI:10.1542/peds.2008-2051] [PMID]

- King TM, Glascoe FP. Developmental surveillance of infants and young children in pediatric primary care. Current Opinion in Pediatrics. 2003; 15(6):624-9. [DOI:10.1097/00008480-200312000-00014] [PMID]

- Sand N, Silverstein M, Glascoe FP, Gupta VB, Tonniges TP, O'Connor KG. Pediatricians' reported practices regarding developmental screening: Do guidelines work? Do they help? Pediatrics. 2005; 116(1):174-9. [DOI:10.1542/peds.2004-1809] [PMID]

- Koo TK, Li MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research. Journal of Chiropractic Medicine. 2016; 15(2):155-63. [DOI:10.1016/j.jcm.2016.02.012] [PMID]

- McHugh ML. Interrater reliability: The kappa statistic. Biochemia Medica. 2012; 22(3):276-82. [DOI:10.11613/BM.2012.031] [PMID]

- Sahebalzamani M, Farahani H. [Validity and reliability of direct observation of procedural skills in evaluating the clinical skills of nursing students of Zahedan nursing and midwifery school (Persian)]. Zahedan Journal of Research in Medical Sciences. 2012; 14(2):e93588. [Link]

- Jabbari A, Hosseini MA, Fatoureh-Chi S, Hosseini A, Farzi M. [Designing a valid & reliable tool for assessing the occupational therapist’s clinical competency (Persian)]. Archives of Rehabilitation. 2014; 14(4):44-9. [Link]

Type of Study: Applicable | Subject: Neurology

Received: 8/12/2022 | Accepted: 16/07/2023 | Published: 1/01/2024


Rights and permissions: This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.